Our approach to digitalization

At opensight, our approach to digitization, and the philosophy behind it, are anchored in one paradigm: every company is a software company.

Philippe Braxmeier

"Every company is a software company"

Companies are realizing that to compete and grow in a digital world, they must look, think, and act like software companies themselves. Many contemporary enterprises collect and process data, which requires them to use, and often to build, a comprehensive software stack.

In our view, digitization is an ongoing journey built on three core principles, the "Three Ways", which we explore in more detail below:

1. Constant Flow of Work

Modern Application Architecture: fast release cycles, fast time to market

2. Feedback Loops

Site Reliability Engineering: measuring performance, providing stable and reliable software

3. Constant Improvement

Experimentation: improvement, innovation, interaction

Based on The Phoenix Project by Gene Kim

1. Constant Flow of Work

Roman Hüsler

"The constant delivery of working software is the primary measure of progress. Modern application architectures facilitate swift release cycles and rapid time to market for new product features."

How does digitization transform your business, and what can we learn from software companies? We have had the privilege of witnessing significant technological shifts over the years.

For software companies, the ability to respond swiftly to evolving customer needs and to create innovative offerings is crucial. Ultimately, this requires balancing rapid release cycles on the one hand against stable and reliable software operations on the other. Delivering ten new software releases per day while keeping operations consistent and dependable was unattainable with traditional software architectures. What is needed is an architecture that enables a constant "flow of work": essentially a pipeline that moves new features continuously, and with little friction, from development into production. Let's examine how software architectures have changed over the past two decades.

Evolution of software architectures over the past two decades (oldest to newest):

Waterfall → Agile → DevOps

Monolithic → N-Tier → Microservices

Physical Server → Server Virtualisation → Containers

Data Center → Hosted IaaS → Hyperscaler

In older development models such as waterfall, characterized by slow release cycles (e.g., one release per year), there was a considerable risk of investing extensive effort in features that no longer matched customer interests by the time they were released. To address this, development evolved towards agile methodologies, which keep a continuous focus on business demand and customer needs and test and integrate new, smaller features frequently in short, iterative sprints. However, these rapid releases created operational challenges, which led to the next phase: a tighter integration of development and operations teams, culminating in the adoption of DevOps practices. This progression represents a transformative journey from traditional waterfall development, through agile methodologies, to the collaborative and efficient practices of DevOps.

In the past, software products were built as monoliths, which made collaboration among developers difficult. There was also a significant risk that each update would unintentionally introduce errors affecting other parts of the software. Software was subsequently split into N-tier architectures, in which each tier (for example presentation, business logic, and data access) fulfills a specialized role. This brought advantages such as increased modularity, scalability, maintainability, upgradeability, error isolation, and flexibility. Development continued in the same direction and culminated in the adoption of microservices, which amplify these benefits further and give rise to highly resilient, decentralized, and scalable application models. Combined with Continuous Delivery, these models enable exceptionally fast release cycles.

Containerization makes more efficient use of physical resources, which also makes it more cost-efficient. It brings other significant benefits as well: portability, scalability, consistency (dev, test, and prod parity), better dependency management (each container ships with its own dependencies), and ease of deployment. Containerization was, and still is, an absolute game changer.

Cloud infrastructure offers significant benefits. Capex vs. Opex: your finance department will appreciate that cloud infrastructure can grow with your business essentially from day zero, with no large upfront investment. It also offers scalability and flexibility (from zero to global), elasticity (adapting to peaks and lows in demand), and cost-effectiveness. On top of that come fixed SLAs (reliability, redundancy) and, overall, better security, backup, and disaster recovery.

2. Feedback Loops

Systems monitoring provides more than feedback on whether a system works: it can also deliver valuable business insights and reveal long-term trends. It is also a crucial part of every DevOps pipeline.

Roman Hüsler

"If you're blind to what's happening, you can't be reliable. Your monitoring system should address two questions: what's broken, and why?"

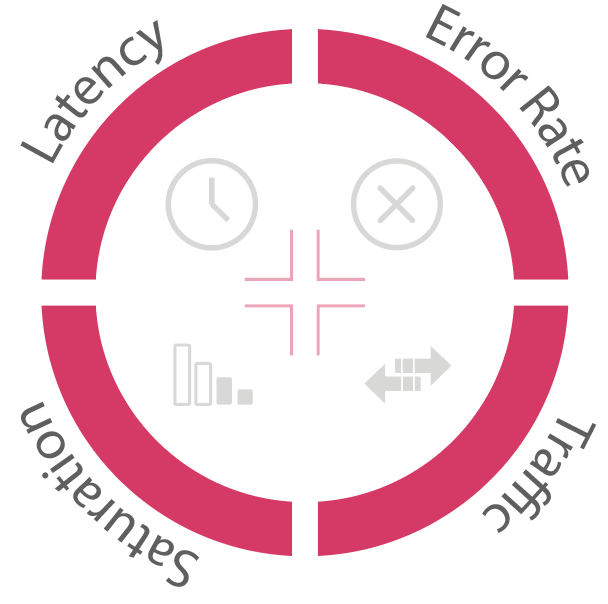

To monitor the health of distributed systems, we focus in particular on the "four golden signals" described in Google's Site Reliability Engineering (SRE) book:

Latency: the time it takes to service a request

Traffic: a measure of how much demand is being placed on your system

Errors: the rate of requests that fail

Saturation: how "full" your service is (e.g., memory, I/O, CPU consumption)
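To make the four signals more tangible, here is a minimal Python sketch that summarizes one observation window into latency, traffic, errors, and saturation. It is an illustration under stated assumptions, not our monitoring setup: the `Request` record, the `golden_signals` and `percentile` helpers, and the per-resource `utilization` readings are all hypothetical. In practice, a monitoring system collects and aggregates these values for you.

```python
import math
from dataclasses import dataclass


@dataclass
class Request:
    """One served request (hypothetical record format for this sketch)."""
    duration_ms: float  # time taken to service the request
    failed: bool        # True if the request ended in an error


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile of values for p in (0, 100]; 0.0 if empty."""
    if not values:
        return 0.0
    ordered = sorted(values)
    rank = math.ceil(p / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def golden_signals(requests: list[Request], window_s: float,
                   utilization: dict[str, float]) -> dict[str, float]:
    """Summarize one observation window into the four golden signals.

    utilization maps a resource name (e.g. "cpu") to how full it is, 0.0..1.0.
    """
    total = len(requests)
    failed = sum(1 for r in requests if r.failed)
    # Latency is taken from successful requests only, so fast failures
    # do not make the service look quicker than it really is.
    ok_durations = [r.duration_ms for r in requests if not r.failed]
    return {
        "latency_p50_ms": percentile(ok_durations, 50),        # Latency (median)
        "latency_p99_ms": percentile(ok_durations, 99),        # Latency (tail)
        "traffic_rps": total / window_s,                        # Traffic
        "error_rate": failed / total if total else 0.0,         # Errors
        "saturation": max(utilization.values(), default=0.0),  # Saturation
    }


if __name__ == "__main__":
    # Hypothetical window: four requests observed over two seconds.
    window = [Request(120, False), Request(80, False),
              Request(300, False), Request(50, True)]
    print(golden_signals(window, window_s=2.0,
                         utilization={"cpu": 0.65, "memory": 0.8, "io": 0.3}))
```

Two design choices are worth noting. Latency is measured over successful requests only, since the SRE book advises distinguishing the latency of successful and failed requests: a failed request can be served very quickly and would otherwise distort the picture. Percentiles are reported instead of an average because tail latency is often what users actually notice.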