CICD, K8S, Gitlab, Kaniko - Container Builds on a private Kubernetes Cluster

How to build Docker container images on your private Kubernetes cluster with a Gitlab CI/CD pipeline.

Usually, CI/CD jobs in Gitlab run on the shared runners. If you would like to run builds and CI/CD jobs on your own Kubernetes infrastructure, today’s blog post is for you: we show how to run container builds on a private Kubernetes cluster.

opensight.ch – roman hüsler

You can build Docker container images with the familiar “docker build” command. This requires a host on which the Docker Engine and the command-line tools are installed. But what if you would like to run the builds in a pod on your existing Kubernetes cluster? This is what Kaniko is for: it builds container images from a Dockerfile inside a container or Kubernetes pod, without needing a Docker daemon. That’s what today’s blog post is about.

In a few steps, we create a CI/CD pipeline on Gitlab for a project with the following properties:

- NodeJS project

- Google Container Registry

- Gitlab CI/CD Pipeline

- Gitlab Runner on our local Kubernetes cluster

- Docker builds run with Kaniko on our own Kubernetes cluster

We hope that some of the steps described here will also help you with your own setup.

Prerequisites

- We assume that you already have a NodeJS project (or similar) on Gitlab with which to do this setup.

- We assume that you already have a Kubernetes cluster and have configured the Helm CLI.

- We assume that you already have a container registry, for example on Google Cloud.

Contents

- Setup Gitlab Runner

- Dockerfile

- Pipeline Variables, Authentication Container Registry

- CICD Pipeline

- Conclusion - Container Builds on a private Kubernetes Cluster

Setup Gitlab Runner

We don’t want our Gitlab CI/CD pipeline to run on a public node, but locally on our own Kubernetes cluster. Therefore we install a Gitlab Runner on the cluster.

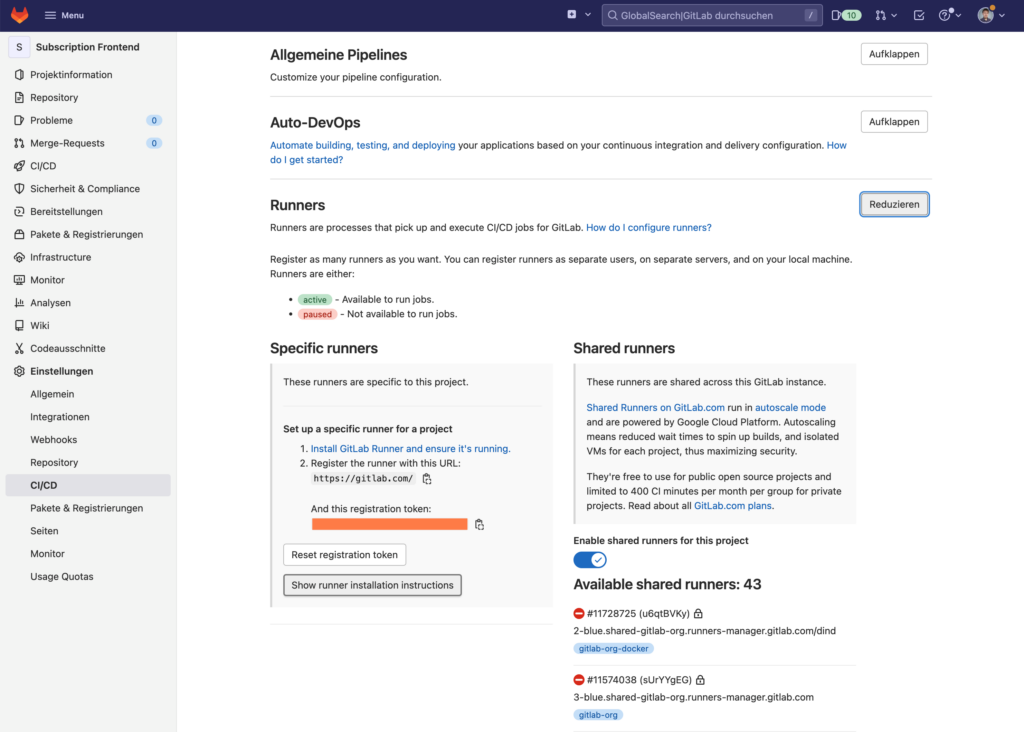

Gitlab lists the project's runners under “Settings / CI/CD”. The same page shows the registration token for our local runner (masked in orange in the screenshot) as well as instructions for installing the runner.

Following these instructions, we connect to the Kubernetes cluster (via SSH) and add the Helm repository. Then we create a values.yaml with the installation parameters and install the Gitlab Runner.

```shell
# add helm repository
helm repo add gitlab https://charts.gitlab.io

# configure installation parameters
cat << EOF > values.yaml
gitlabUrl: https://gitlab.com
runnerRegistrationToken: xxxxxxxxx
rbac:
  create: true
EOF

# gitlab runner installation on k8s
helm install gitlab-runner -f values.yaml gitlab/gitlab-runner
```

After a short wait, the runner pod is running on the cluster and the runner appears in Gitlab. The “Shared Runner” can now be disabled in Gitlab under “Settings / CI/CD / Runners”.
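If you want to keep the installation step as a reusable script without hard-coding the token, the values.yaml can also be generated from an environment variable. A minimal sketch, assuming a hypothetical variable named GITLAB_RUNNER_TOKEN (not part of the original setup); it falls back to the placeholder token used above:

```shell
#!/bin/sh
# Sketch: generate values.yaml for the gitlab/gitlab-runner Helm chart.
# GITLAB_RUNNER_TOKEN is a hypothetical variable name chosen for this example;
# without it, the placeholder token from the article is used.
set -eu

GITLAB_RUNNER_TOKEN="${GITLAB_RUNNER_TOKEN:-xxxxxxxxx}"

# Unquoted EOF: the shell expands ${GITLAB_RUNNER_TOKEN} inside the heredoc.
cat << EOF > values.yaml
gitlabUrl: https://gitlab.com
runnerRegistrationToken: ${GITLAB_RUNNER_TOKEN}
rbac:
  create: true
EOF

# The file contains a secret, so make it readable only by the current user.
chmod 600 values.yaml
```

Export the real token as GITLAB_RUNNER_TOKEN before running the script, then install the chart with the same helm install command as above.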

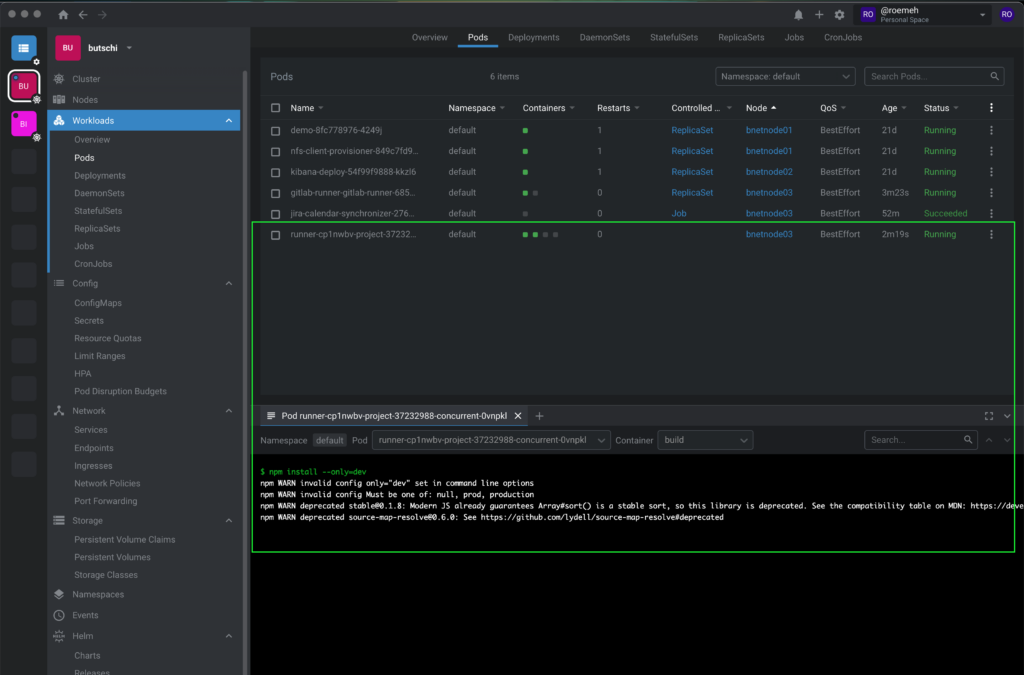

When a CI/CD job is now executed, the Gitlab Runner automatically starts a pod on our Kubernetes cluster in which the job runs.

Dockerfile

Our NodeJS project already contains a simple Dockerfile.

```dockerfile
FROM node:latest

# Create app directory
WORKDIR /usr/src/app

# Install dependencies (npm is already installed)
COPY package*.json ./
RUN npm install

# Bundle app source
COPY . .

EXPOSE 3000
CMD [ "npm", "start" ]
```

Now we want to configure the Gitlab CI/CD pipeline so that CI/CD jobs run on our Gitlab Runner (i.e. on our own Kubernetes cluster) and container images are built with Kaniko.

Pipeline Variables, Authentication Container Registry

For the pipeline to access the container registry and push the built container image, we first define some variables in the Gitlab project.

=> CI_REGISTRY

Path to the container registry, e.g. on Google Cloud: "eu.gcr.io"

=> (CI_REGISTRY_USER)

Username for container registry access

=> (CI_REGISTRY_PASSWORD)

Password for container registry access

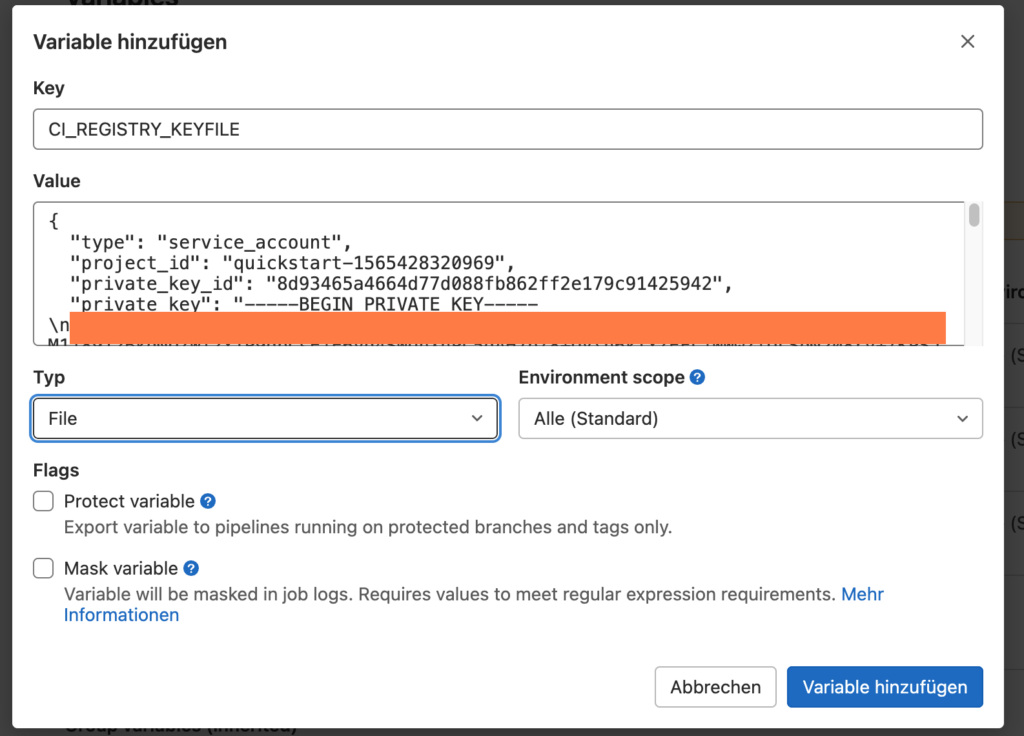

=> CI_REGISTRY_KEYFILE

Instead of username / password, we use a JSON keyfile for the Google Container Registry.

From the variables CI_REGISTRY_USER and CI_REGISTRY_PASSWORD, the CI/CD pipeline can create a Docker config.json for Kaniko that contains the credentials for the container registry:

```shell
mkdir -p /kaniko/.docker
echo "{\"auths\":{\"${CI_REGISTRY}\":{\"auth\":\"$(printf "%s:%s" "${CI_REGISTRY_USER}" "${CI_REGISTRY_PASSWORD}" | base64 | tr -d '\n')\"}}}" > /kaniko/.docker/config.json
```

In our case, however, we use the Google Container Registry and authenticate with a JSON key file, so a slightly different approach is necessary.
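For the username/password variant shown above, the generated file can be checked locally: the auth field is simply the base64 encoding of "user:password", so decoding it must return the original credentials. A minimal sketch, assuming dummy credentials in place of the Gitlab variables and a local directory (hypothetical DOCKER_CONFIG_DIR) instead of /kaniko/.docker so it can run outside the Kaniko image:

```shell
#!/bin/sh
# Sketch: build a Kaniko (Docker-format) config.json from username/password
# and verify the encoded "auth" value. The defaults are dummy values;
# in the pipeline, the Gitlab CI/CD variables provide them.
set -eu

CI_REGISTRY="${CI_REGISTRY:-eu.gcr.io}"
CI_REGISTRY_USER="${CI_REGISTRY_USER:-demo-user}"
CI_REGISTRY_PASSWORD="${CI_REGISTRY_PASSWORD:-demo-password}"
# Hypothetical local directory; inside the Kaniko image this is /kaniko/.docker
DOCKER_CONFIG_DIR="${DOCKER_CONFIG_DIR:-./kaniko-docker}"

mkdir -p "$DOCKER_CONFIG_DIR"

# base64 may wrap long output; tr removes line breaks so the JSON stays on one line
AUTH=$(printf '%s:%s' "$CI_REGISTRY_USER" "$CI_REGISTRY_PASSWORD" | base64 | tr -d '\n')
printf '{"auths":{"%s":{"auth":"%s"}}}\n' "$CI_REGISTRY" "$AUTH" > "$DOCKER_CONFIG_DIR/config.json"

# Decode again and compare, without printing the password
DECODED=$(printf '%s' "$AUTH" | base64 -d)
if [ "$DECODED" = "${CI_REGISTRY_USER}:${CI_REGISTRY_PASSWORD}" ]; then
  echo "config.json OK for $CI_REGISTRY"
else
  echo "auth value does not match the credentials" >&2
  exit 1
fi
```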

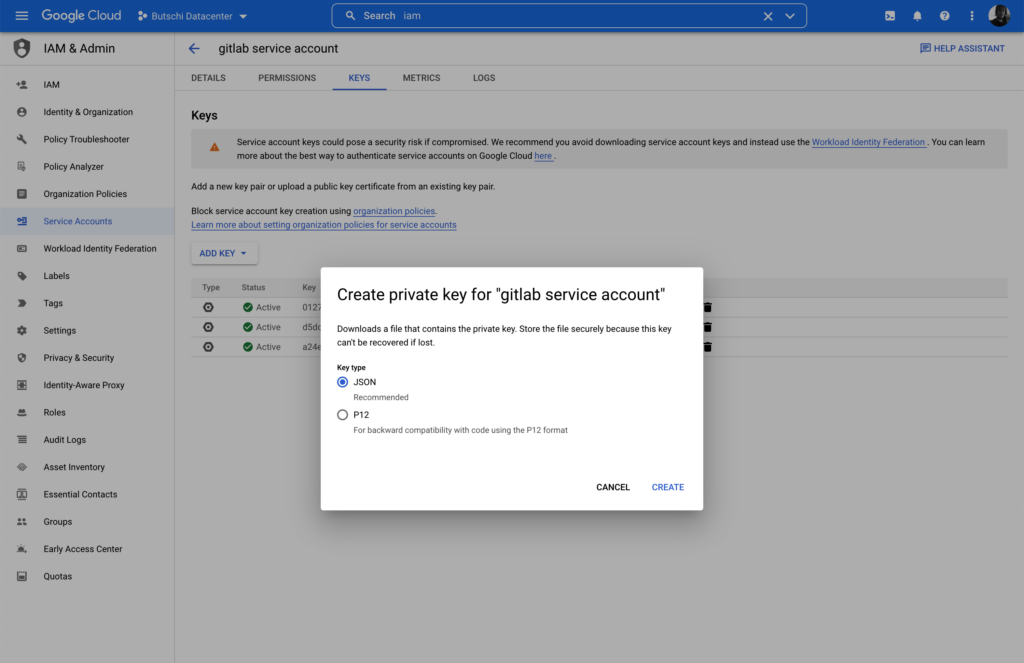

We create a service account (“gitlab-service-account”) with the “Storage Admin” role in Google Cloud (IAM & Admin). Then we create a JSON key for the account and download it as keyfile.json.
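The same console steps can also be scripted with the gcloud CLI. A minimal sketch, assuming gcloud is installed and authenticated; PROJECT_ID defaults to the example project ID from the pipeline below (a placeholder), and the hypothetical DRY_RUN switch only prints the commands unless it is set to 0:

```shell
#!/bin/sh
# Sketch: create the service account, grant Storage Admin and export a JSON key.
# PROJECT_ID is a placeholder; DRY_RUN=1 (default) only prints the commands.
set -eu

PROJECT_ID="${PROJECT_ID:-quickstart-12345678}"
SA_NAME="gitlab-service-account"
SA_EMAIL="${SA_NAME}@${PROJECT_ID}.iam.gserviceaccount.com"
DRY_RUN="${DRY_RUN:-1}"

# Print each command; execute it only when DRY_RUN=0
run() {
  echo "+ $*"
  if [ "$DRY_RUN" = "0" ]; then
    "$@"
  fi
}

# 1. Create the service account
run gcloud iam service-accounts create "$SA_NAME" --project="$PROJECT_ID"

# 2. Grant the Storage Admin role (Container Registry keeps images in Cloud Storage)
run gcloud projects add-iam-policy-binding "$PROJECT_ID" \
  --member="serviceAccount:${SA_EMAIL}" \
  --role="roles/storage.admin"

# 3. Create and download a JSON key for the account
run gcloud iam service-accounts keys create keyfile.json \
  --iam-account="$SA_EMAIL"
```

Printing first makes it easy to review the role binding before anything is changed in the project.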

We store the file in the Gitlab project as a “File” variable “CI_REGISTRY_KEYFILE”.

From the key file, the CI/CD pipeline later generates the authentication file for Kaniko. For the Google Container Registry, the username is “_json_key” and the password is the complete content of the key file:

```shell
echo "{\"auths\":{\"${CI_REGISTRY}\":{\"auth\":\"$(printf "%s:%s" "_json_key" "$(cat "${CI_REGISTRY_KEYFILE}")" | base64 | tr -d '\n')\"}}}" > /kaniko/.docker/config.json
```

In the Gitlab project, an active runner should now be visible under “Settings / CI/CD”, where the “Shared Runner” can also be disabled. In addition, the variables CI_REGISTRY and CI_REGISTRY_KEYFILE are defined, with CI_REGISTRY_KEYFILE being a variable of type “File”.
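To try the key-file variant outside the pipeline, the same construction can be run against a dummy key. A minimal sketch, assuming a made-up dummy-keyfile.json (written only when CI_REGISTRY_KEYFILE is not set) and a local directory (hypothetical DOCKER_CONFIG_DIR) instead of /kaniko/.docker:

```shell
#!/bin/sh
# Sketch: build config.json for Google Container Registry from a JSON keyfile.
# In the pipeline, CI_REGISTRY_KEYFILE is a Gitlab "File" variable holding the
# path to the real key; here a dummy key is written if it is not set.
set -eu

CI_REGISTRY="${CI_REGISTRY:-eu.gcr.io}"
DOCKER_CONFIG_DIR="${DOCKER_CONFIG_DIR:-./kaniko-docker}"  # /kaniko/.docker in the Kaniko image

if [ -z "${CI_REGISTRY_KEYFILE:-}" ]; then
  CI_REGISTRY_KEYFILE=./dummy-keyfile.json
  printf '%s\n' '{"type": "service_account", "project_id": "quickstart-12345678"}' > "$CI_REGISTRY_KEYFILE"
fi

mkdir -p "$DOCKER_CONFIG_DIR"

# GCR expects the username "_json_key" and the whole key file as password
AUTH=$(printf '%s:%s' "_json_key" "$(cat "$CI_REGISTRY_KEYFILE")" | base64 | tr -d '\n')
printf '{"auths":{"%s":{"auth":"%s"}}}\n' "$CI_REGISTRY" "$AUTH" > "$DOCKER_CONFIG_DIR/config.json"

# Check the decoded prefix without printing the key itself
case "$(printf '%s' "$AUTH" | base64 -d)" in
  _json_key:*) echo "config.json OK for $CI_REGISTRY" ;;
  *) echo "unexpected auth value" >&2; exit 1 ;;
esac
```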

CI/CD Pipeline

Add a “.gitlab-ci.yml” file to the project, in which the CI/CD pipeline is defined.

In the “build” stage, Kaniko builds the container image according to the Dockerfile and then pushes it to the Google Container Registry.
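The two --destination flags in the build job combine CI_REGISTRY with the job variables into Google Container Registry references of the form HOST/PROJECT-ID/IMAGE:TAG. A small sketch that only prints the resulting references, using the example values from the pipeline and assuming CI_REGISTRY is "eu.gcr.io":

```shell
#!/bin/sh
# Sketch: show which image references the two --destination flags resolve to.
# Values mirror the pipeline variables; CI_REGISTRY defaults to an example value.
set -eu

CI_REGISTRY="${CI_REGISTRY:-eu.gcr.io}"
GKE_PROJECT="quickstart-12345678"
IMAGE_NAME="my-container-image-name"
APP_VERSION="1.0"

# GCR image paths: HOST/PROJECT-ID/IMAGE:TAG
IMAGE_REPO="${CI_REGISTRY}/${GKE_PROJECT}/${IMAGE_NAME}"
VERSION_REF="${IMAGE_REPO}:${APP_VERSION}"
LATEST_REF="${IMAGE_REPO}:latest"

echo "$VERSION_REF"
echo "$LATEST_REF"
```

With the default registry, this prints eu.gcr.io/quickstart-12345678/my-container-image-name:1.0 and the same reference with the tag latest. Both tags point to the same image, so a pull of latest always gets the most recent build.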

```yaml
variables:
  GKE_PROJECT: quickstart-12345678
  IMAGE_NAME: my-container-image-name
  APP_VERSION: "1.0"

stages:
  - build

# build the image with Kaniko (no Docker daemon or docker-in-docker service needed)
build:
  stage: build
  image:
    name: gcr.io/kaniko-project/executor:debug
    entrypoint: [""]
  script:
    # hide the kaniko aws error
    - |
      export AWS_ACCESS_KEY_ID="none"
      export AWS_SECRET_ACCESS_KEY="none"
      export AWS_SESSION_TOKEN="none"
    # container registry credentials (GCR username "_json_key", password = key file content)
    # no ": " (colon + space) in the echo line, otherwise YAML parses it as a mapping key
    - mkdir -p /kaniko/.docker
    - echo "{\"auths\":{\"${CI_REGISTRY}\":{\"auth\":\"$(printf "%s:%s" "_json_key" "$(cat "${CI_REGISTRY_KEYFILE}")" | base64 | tr -d '\n')\"}}}" > /kaniko/.docker/config.json
    # build and push container image using kaniko
    - >-
      /kaniko/executor
      --context "."
      --dockerfile "./Dockerfile"
      --destination "${CI_REGISTRY}/${GKE_PROJECT}/${IMAGE_NAME}:${APP_VERSION}"
      --destination "${CI_REGISTRY}/${GKE_PROJECT}/${IMAGE_NAME}:latest"
```

Container Builds on a private Kubernetes Cluster

You can now build container images with Kaniko on your private Kubernetes cluster, without a Docker daemon and without docker-in-docker.