Deploying Kafka in Production: From Zero to Hero (Part 4)

Learn to build a secure, scalable Kafka cluster on GCP with OAuth 2.0 authentication using Keycloak. This guide covers Strimzi OAuth libraries, custom Docker images, and enterprise-grade access control for production-ready messaging.

Kafka in Production - We are Building a Scalable, Secure, and Enterprise Ready Message Bus on Google Cloud Platform

opensight.ch - roman hüsler

Table of Contents

- Part 1 - Kafka Single Instance with Docker

- Part 2 - Kafka Single Instance with Authentication (SASL)

- Part 3 - Kafka Cluster with TLS Encryption, Authentication and ACLs

- Part 4 - Enterprise Authentication and Access Control (OAuth 2.0)

- Part 5 - Google Cloud Production Kafka Cluster with Strimzi

- Part 6 - Kafka Strimzi Observability and Data Governance (upcoming)

TL;DR

Although I recommend reading the blog posts, I know some folks skip straight to the “source of truth”—the code. It’s like deploying on a Friday without testing.

So, here you go:

Introduction

Apache Kafka is a powerful event streaming platform, but running it in production is a different challenge altogether. We will start with the basics, but this is not a playground—we work our way towards building an enterprise-grade message bus that delivers high availability, security, and scalability for real-world workloads.

I'm not a Kafka specialist myself—I learn on the go. As is often the case with opensight blogs, you can join me on my journey from zero to hero.

In this blog series, we will take Kafka beyond the basics. We will design a production-ready Kafka cluster deployment that is:

- Scalable – Able to handle increasing load without performance bottlenecks.

- Secure – Enforcing authentication (Plain, SCRAM, OAUTH), authorization (ACL, OAUTH), and encryption (TLS).

- Well-structured – Including Schema Registry for proper data governance.

- Enterprise-ready – Implementing features critical for an event-driven architecture in a large-scale environment.

Enterprise Authentication

Enterprises prefer not to manage user accounts and permissions separately in every system they use. Instead, they integrate an Identity Provider (IdP) to centralize authentication and authorization.

In this guide, I’ll walk you through how I configured Keycloak as an Identity Provider for an Apache Kafka cluster. Of course, you can use a different IdP if needed. By integrating Keycloak, we can efficiently manage user authentication and authorization within Kafka, ensuring secure access control.

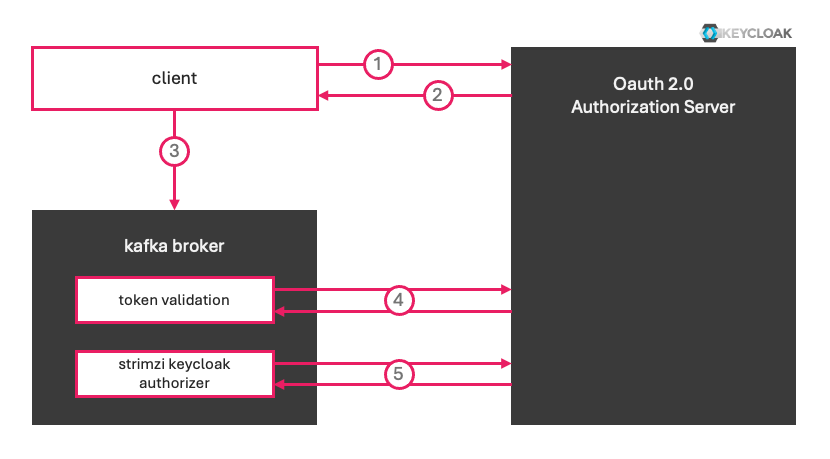

Apache Kafka typically employs the Client Credentials Flow for OAuth 2.0 authentication when integrating with an Identity Provider (IdP) such as Keycloak. The authentication process works as follows:

- Client Authentication: The client that wants to connect to Kafka first authenticates with the OAuth 2.0 server by providing its client credentials (client ID and secret).

- Access Token Retrieval: Upon successful authentication, the OAuth 2.0 server issues an access token. This token includes the subject name (sub) (e.g., "client-1") and a list of authorizations (scopes or permissions).

- Connecting to Kafka: The client then uses the access token to establish a connection with the Kafka broker.

- Token Validation by Kafka Broker: The Kafka broker validates the token to ensure its authenticity. In this case, validation is performed by verifying the token's signature. To do so, the broker retrieves the public key from the authorization server’s JWKS (JSON Web Key Set) endpoint. This allows Kafka to confirm that the token was issued by a trusted identity provider.

- Strimzi Keycloak Authorizer: The Strimzi Keycloak Authorizer (see the Strimzi documentation) is used on the brokers in this setup. It contacts the OAuth server every minute to fetch the current authorizations.
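The first two steps boil down to a single form-encoded POST against the IdP's token endpoint. Here is a minimal Python sketch (standard library only) that builds such a request with `grant_type=client_credentials`. The helper name `build_token_request` is hypothetical, and the URL and secret are placeholders. It only constructs the request, so nothing is sent until you pass it to `urllib.request.urlopen`.

```python
from urllib.parse import parse_qs, urlencode
from urllib.request import Request


def build_token_request(token_url: str, client_id: str, client_secret: str) -> Request:
    """Build (but do not send) an OAuth 2.0 client credentials token request."""
    # Client credentials are sent in the form body (client_secret_post style).
    body = urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
    }).encode("ascii")
    return Request(
        token_url,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_token_request(
        "https://my-keycloakserver.ch/auth/realms/master/protocol/openid-connect/token",
        "client-1",
        "change-me",  # placeholder, use the secret from the Keycloak credentials tab
    )
    print(req.get_method(), req.full_url)
    print(parse_qs(req.data.decode("ascii")))
```

Keycloak accepts the credentials in the form body like this; other IdPs may prefer HTTP Basic authentication for the client instead. The JSON response carries the token in the standard `access_token` field.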

In essence, authentication and authorization are delegated to an external Identity Provider (IdP) like Keycloak, rather than being managed directly within the Kafka cluster. This approach centralizes identity and access management, allowing administrators to control authentication, authorization, and token policies directly within Keycloak.
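The signature check in the validation step starts by picking the right public key: the JWT header carries a key ID (`kid`) that has to match one of the keys published at the JWKS endpoint. Below is a minimal sketch of just that lookup, assuming a JWKS in the usual `{"keys": [...]}` shape. The function names are made up for illustration, and the sketch does not verify the signature itself; that job belongs to a proper JOSE library such as Nimbus JOSE+JWT, which the custom image in the next section ships.

```python
import base64
import json


def b64url_decode(segment: str) -> bytes:
    """Decode a base64url segment, restoring the padding that JWTs strip off."""
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def jwt_header(token: str) -> dict:
    """Return the decoded (unverified) JOSE header of a compact JWT."""
    return json.loads(b64url_decode(token.split(".")[0]))


def select_jwk(token: str, jwks: dict) -> dict:
    """Pick the signing key from the JWKS whose 'kid' matches the token header."""
    kid = jwt_header(token).get("kid")
    for key in jwks.get("keys", []):
        # Keycloak may also publish encryption keys (use=enc); skip those.
        if key.get("kid") == kid and key.get("use", "sig") == "sig":
            return key
    raise KeyError(f"no signing key with kid={kid!r} in JWKS")
```

Brokers typically cache the JWKS and only refetch it periodically, which is why a `kid` lookup like this is cheap compared to a call to the IdP per connection.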

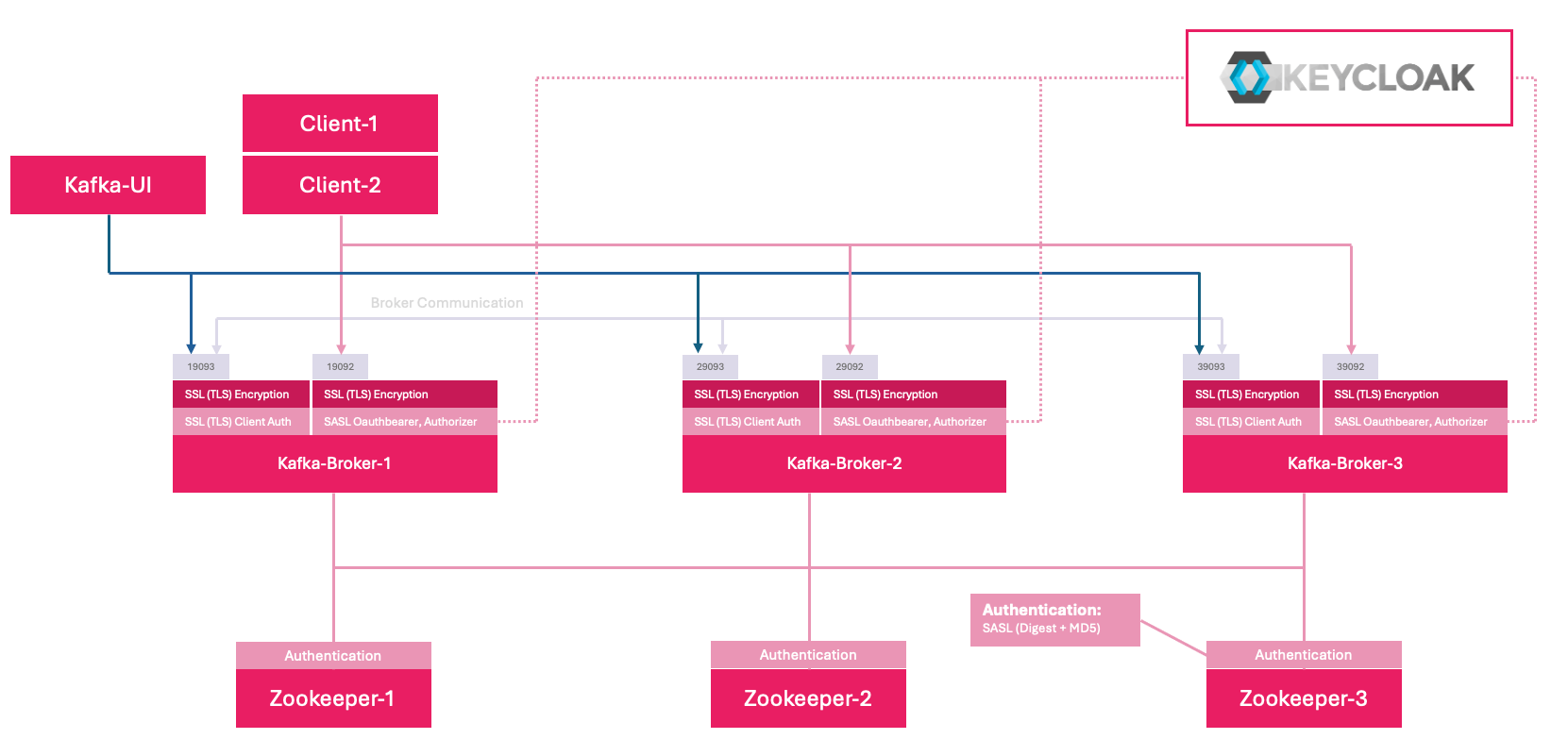

Overview

Our Kafka deployment has grown and is getting a little more complex as we integrate the enterprise authentication features. Each broker now exposes two listeners:

- SSL (ports 19093, 29093, 39093): Encryption and SSL (TLS) authentication for inter-broker communication within the cluster. Kafka UI also connects to this internal port.

- SASL_SSL with OAUTHBEARER (ports 19092, 29092, 39092): This port is meant to be exposed to the outside world so that Kafka clients can connect to the brokers and authenticate with OAuth 2.0.
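The port numbers follow a simple scheme: broker N uses N9092 for the external SASL_SSL listener and N9093 for the internal SSL listener. The small sketch below derives each broker's advertised listeners from that scheme. The hostnames `kafka-1` to `kafka-3` and the listener name `SASLSSL` match the broker configuration further down; the helper itself and the protocol map value are my own illustration.

```python
# Custom listener names must be mapped to a security protocol. The post does not
# show this setting; this value is an assumption matching the two listeners.
LISTENER_SECURITY_PROTOCOL_MAP = "SASLSSL:SASL_SSL,SSL:SSL"


def advertised_listeners(broker_id: int) -> str:
    """Return the advertised listeners string for broker `broker_id` (1-based)."""
    host = f"kafka-{broker_id}"
    external_port = broker_id * 10000 + 9092  # SASL_SSL + OAUTHBEARER, for clients
    internal_port = broker_id * 10000 + 9093  # SSL (mTLS), inter-broker and Kafka UI
    return f"SASLSSL://{host}:{external_port},SSL://{host}:{internal_port}"


if __name__ == "__main__":
    for broker_id in (1, 2, 3):
        print(f"kafka-{broker_id}: {advertised_listeners(broker_id)}")
```

Keeping the broker ID in the leading digit makes it easy to tell from a port number alone which broker and which listener a client is talking to.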

Preparations

Before diving into setting up enterprise-style authentication with OAuth 2.0 on Kafka, we first prepare our "mise en place" 🍽️ - ensuring all necessary components are in place for a smooth configuration process.

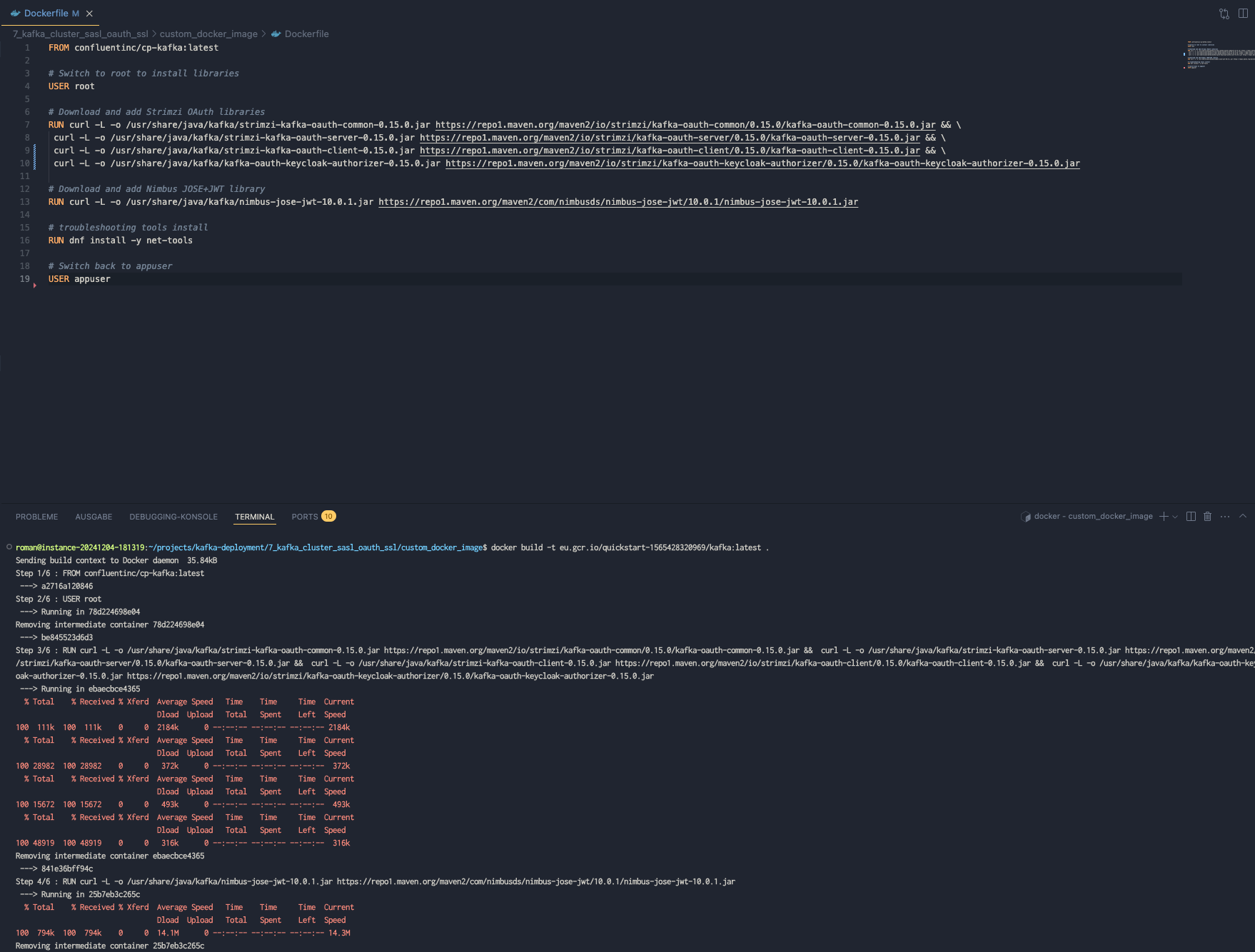

Custom Kafka Docker Image

Apache Kafka® comes with basic OAuth2 support in the form of a SASL-based authentication module, which provides client-server retrieval, exchange, and validation of the access tokens used as credentials. For real-world usage, extensions have to be provided in the form of JAAS callback handlers, which is what Strimzi Kafka OAuth does. So we will now build ourselves a Kafka image that includes the necessary Strimzi OAuth libraries.

```dockerfile
# Consider pinning a specific tag instead of latest for reproducible production builds
FROM confluentinc/cp-kafka:latest

# Switch to root to install libraries
USER root

# Download and add Strimzi OAuth libraries
# (-f makes curl fail on HTTP errors instead of saving an error page as a .jar)
RUN curl -fL -o /usr/share/java/kafka/strimzi-kafka-oauth-common-0.15.0.jar https://repo1.maven.org/maven2/io/strimzi/kafka-oauth-common/0.15.0/kafka-oauth-common-0.15.0.jar && \
    curl -fL -o /usr/share/java/kafka/strimzi-kafka-oauth-server-0.15.0.jar https://repo1.maven.org/maven2/io/strimzi/kafka-oauth-server/0.15.0/kafka-oauth-server-0.15.0.jar && \
    curl -fL -o /usr/share/java/kafka/strimzi-kafka-oauth-client-0.15.0.jar https://repo1.maven.org/maven2/io/strimzi/kafka-oauth-client/0.15.0/kafka-oauth-client-0.15.0.jar && \
    curl -fL -o /usr/share/java/kafka/kafka-oauth-keycloak-authorizer-0.15.0.jar https://repo1.maven.org/maven2/io/strimzi/kafka-oauth-keycloak-authorizer/0.15.0/kafka-oauth-keycloak-authorizer-0.15.0.jar

# Download and add Nimbus JOSE+JWT library
RUN curl -fL -o /usr/share/java/kafka/nimbus-jose-jwt-10.0.1.jar https://repo1.maven.org/maven2/com/nimbusds/nimbus-jose-jwt/10.0.1/nimbus-jose-jwt-10.0.1.jar

# Install troubleshooting tools
RUN dnf install -y net-tools

# Switch back to appuser
USER appuser
```

Dockerfile - custom Kafka image with Strimzi OAuth libraries

The image was built successfully and pushed to my private Docker registry.
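All downloads in the Dockerfile follow the standard Maven repository layout: the `groupId` with dots turned into slashes, then `artifactId/version/artifactId-version.jar`. When you want to bump the Strimzi OAuth version, a small helper like the hypothetical `maven_central_url` below regenerates the URLs. It only builds strings and does not check that an artifact actually exists for the version you pick.

```python
MAVEN_CENTRAL = "https://repo1.maven.org/maven2"

STRIMZI_OAUTH_VERSION = "0.15.0"
STRIMZI_ARTIFACTS = [
    "kafka-oauth-common",
    "kafka-oauth-server",
    "kafka-oauth-client",
    "kafka-oauth-keycloak-authorizer",
]


def maven_central_url(group_id: str, artifact_id: str, version: str) -> str:
    """Build the Maven Central download URL for a plain JAR artifact."""
    group_path = group_id.replace(".", "/")
    return f"{MAVEN_CENTRAL}/{group_path}/{artifact_id}/{version}/{artifact_id}-{version}.jar"


if __name__ == "__main__":
    for artifact in STRIMZI_ARTIFACTS:
        print(maven_central_url("io.strimzi", artifact, STRIMZI_OAUTH_VERSION))
    print(maven_central_url("com.nimbusds", "nimbus-jose-jwt", "10.0.1"))
```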

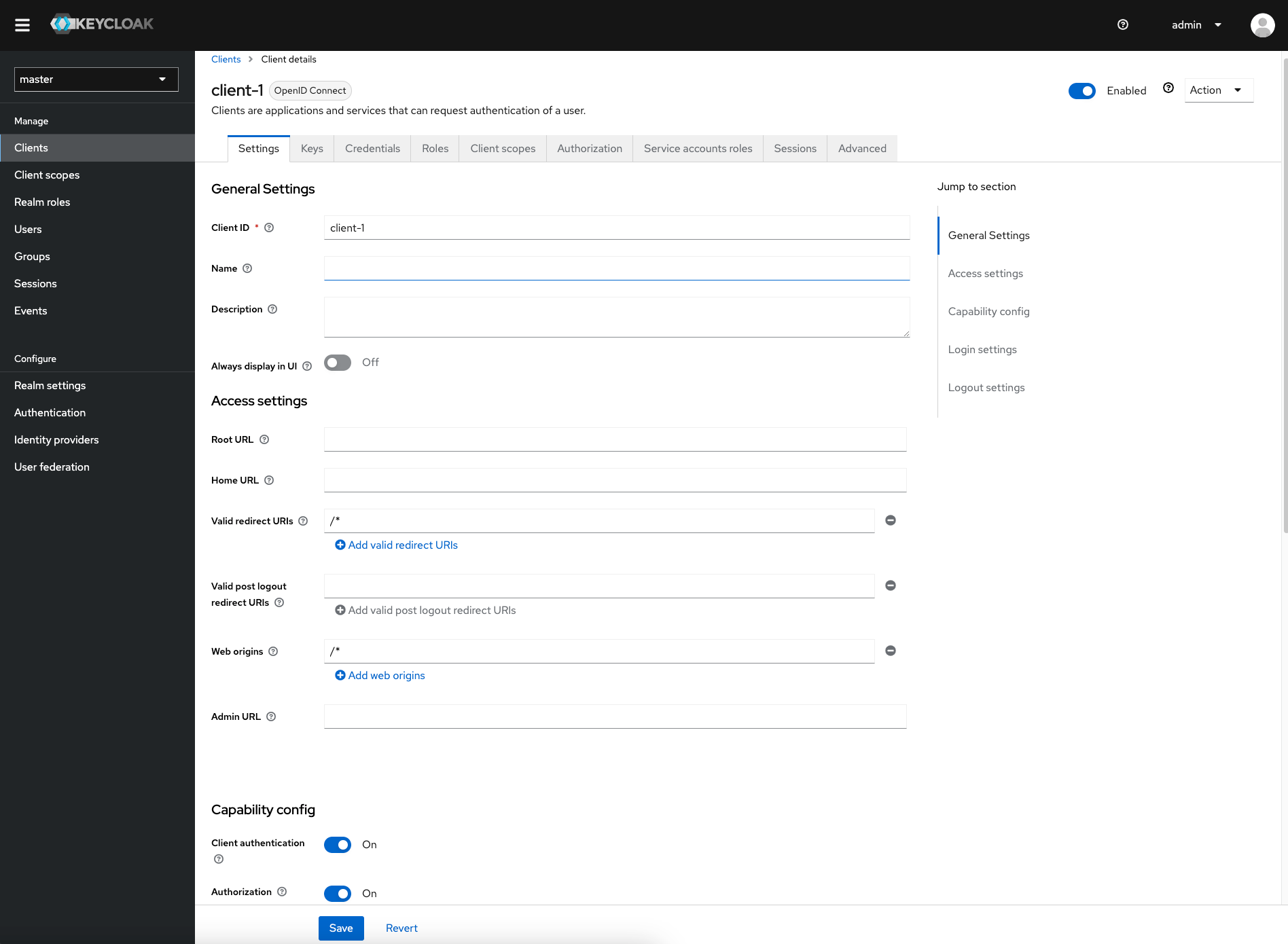

Keycloak Setup

For this blog series, I run a Keycloak server in a Docker container. However, you can use any other Identity Provider of your choice. Keycloak itself is not the main focus of this series—I will simply share my Keycloak configurations that handle authentication and authorization for Kafka.

We prepare already some clients and authorizations that we can use later for testing:

- client-1

Created a "client-1" for testing. Enableclient authenticationandauthorization. Copy theclient secretin the credentials tab for later. - client-2

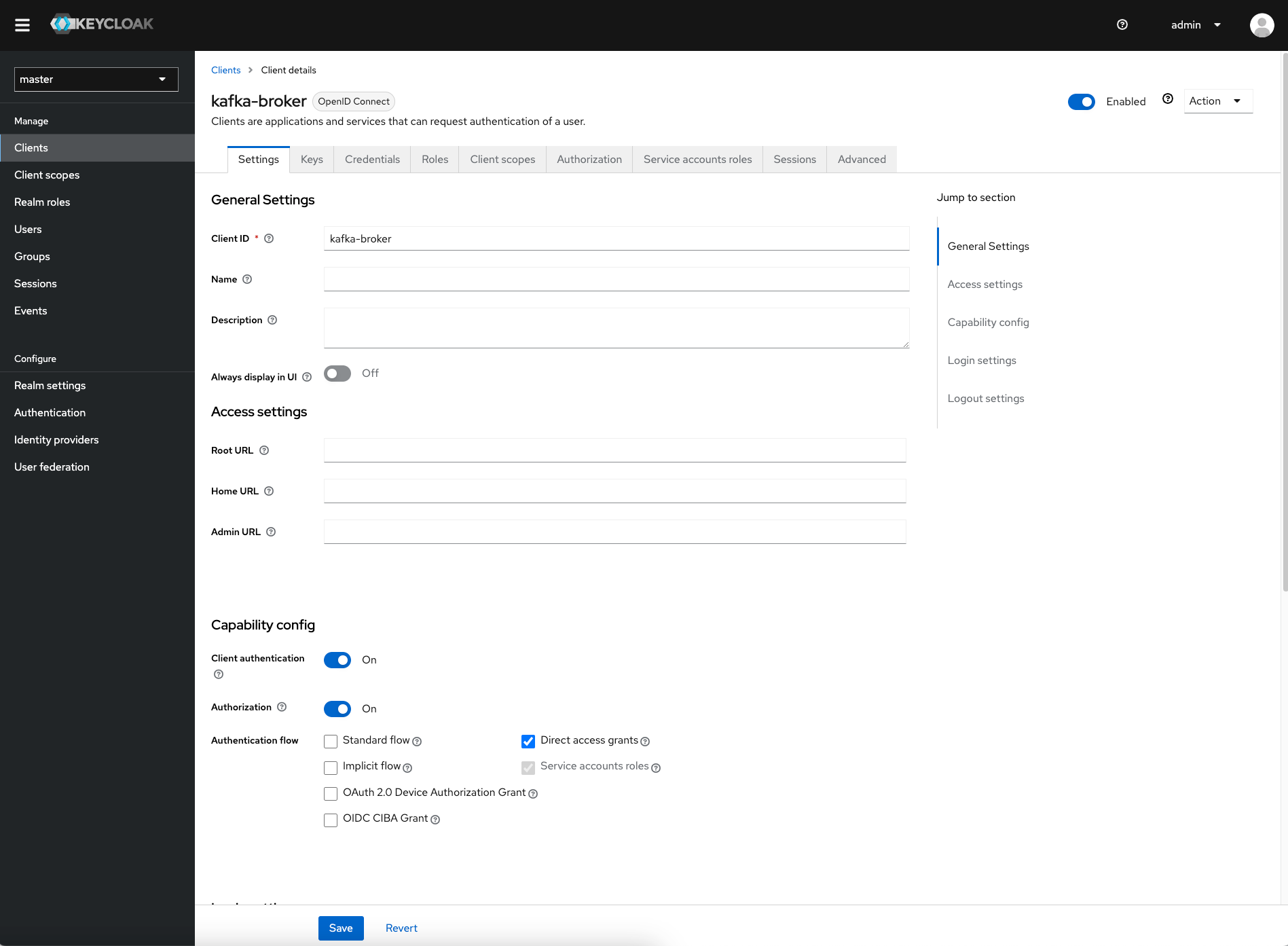

Created a "client-2" for testing. Enableclient authenticationandauthorization. Copy theclient secretin the credentials tab for later. Same config as client-1, except the name. - kafka-broker

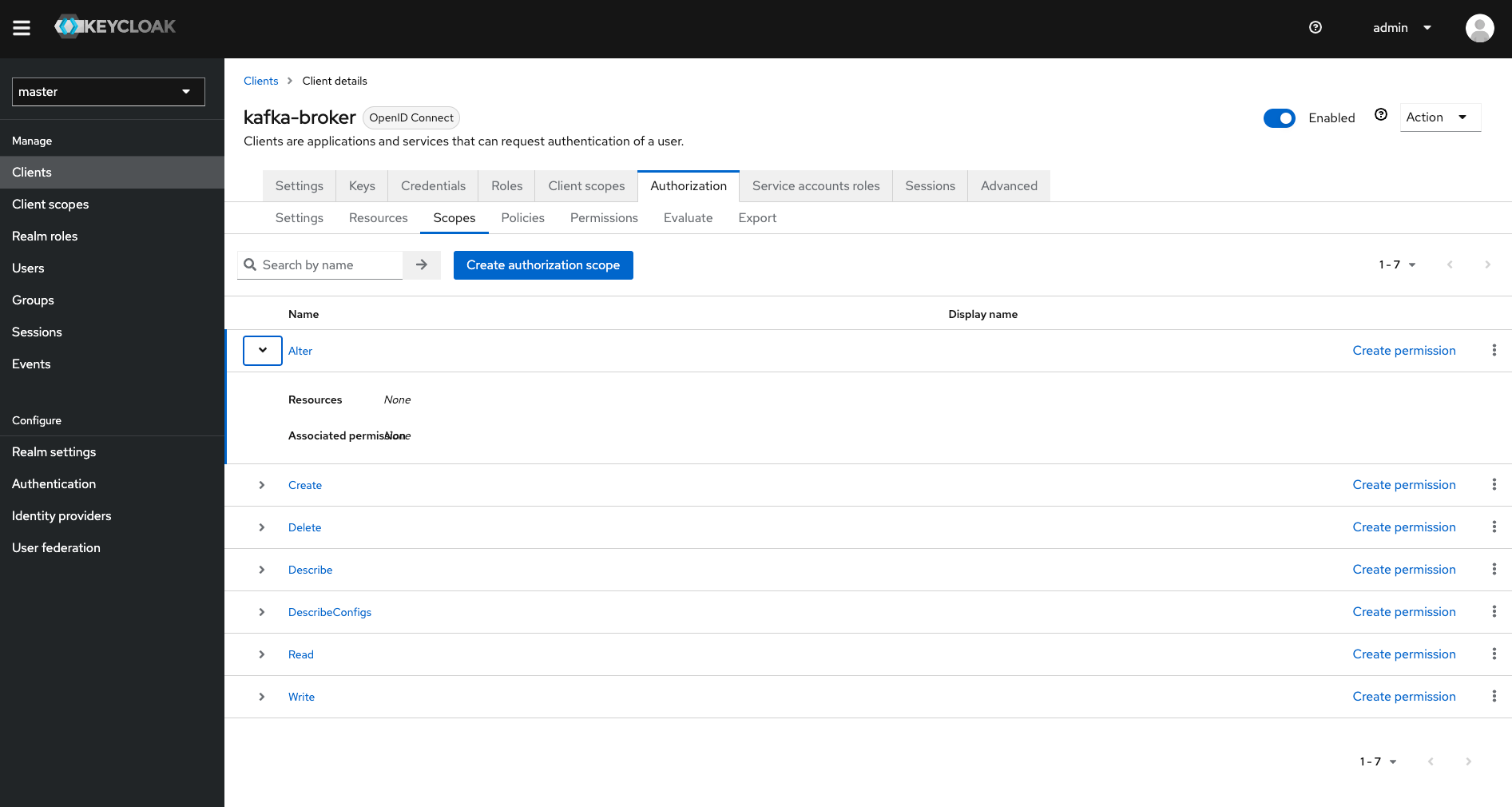

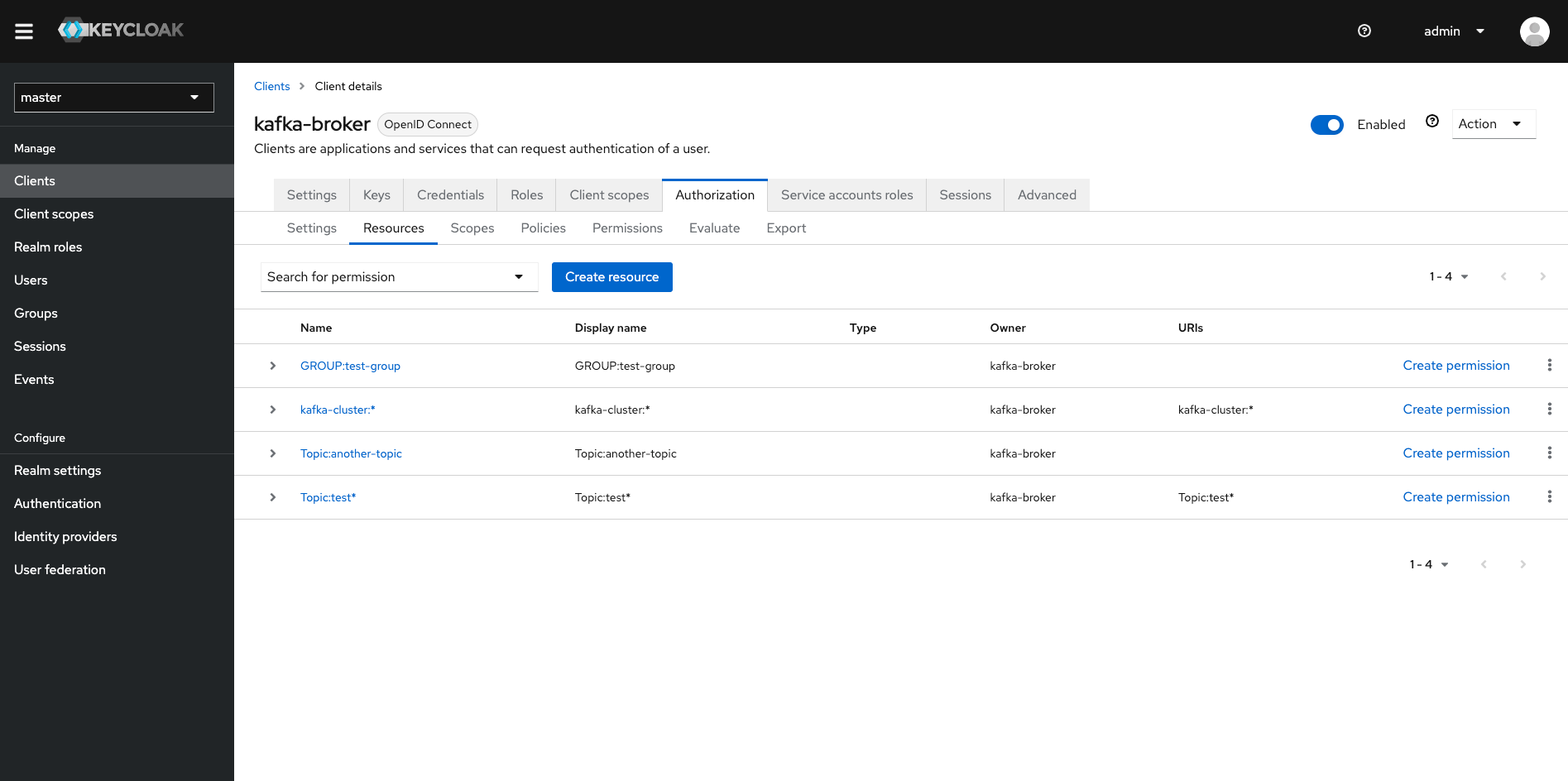

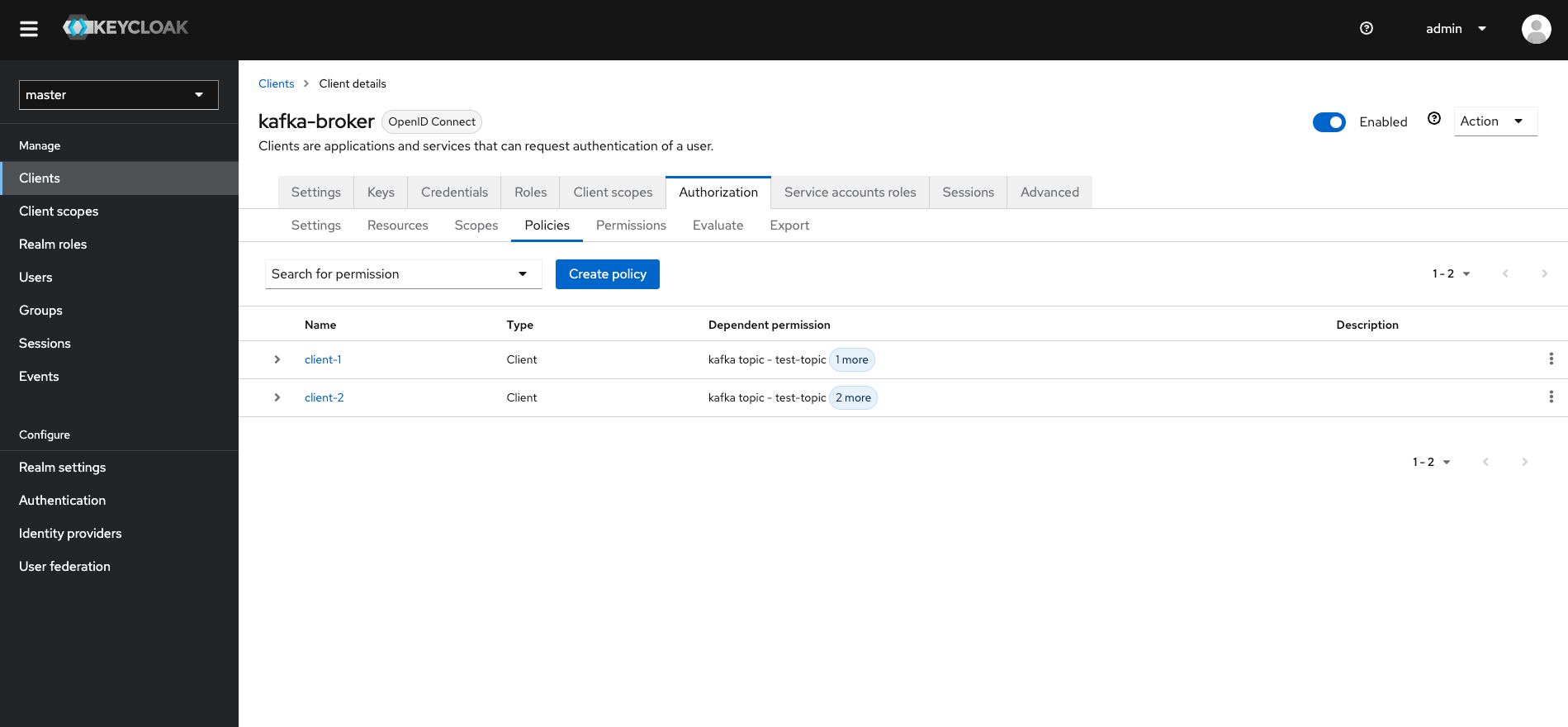

Created a "kafka-broker" client, so that kafka brokers can retrieve the authorizations from the keycloak server. The broker client should also haveauthorizationenabled. Also make note of theclient secret. In the authorization tab, I configured thescopesaccording to the guide of the strimzi kafka keycloak authorizer (docs). Then I also configured someresources. I created apolicyof typeclient- one for "client-1" and one for "client-2". Then finally I createdpermissionsas show in the picture.

gallery - keycloak configurations
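The resource names in Keycloak follow the convention from the Strimzi Keycloak authorizer documentation: `Type:pattern`, optionally prefixed with `kafka-cluster:<name>,`, where a trailing `*` means prefix matching. The sketch below parses such names and checks them against a Kafka resource. It is my own simplified reading of that convention, not Strimzi's code: `parse_resource` and `matches` are hypothetical helpers, and the cluster name is compared exactly.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class KeycloakResource:
    cluster: Optional[str]  # None means the resource applies to any cluster
    resource_type: str      # e.g. "Topic", "Group", "Cluster"
    pattern: str            # exact name, or a prefix ending in "*"


def parse_resource(name: str) -> KeycloakResource:
    """Parse 'Topic:test*' or 'kafka-cluster:my-cluster,Topic:test*'."""
    cluster = None
    if name.startswith("kafka-cluster:"):
        cluster_part, name = name.split(",", 1)
        cluster = cluster_part.split(":", 1)[1]
    resource_type, pattern = name.split(":", 1)
    return KeycloakResource(cluster, resource_type, pattern)


def matches(res: KeycloakResource, resource_type: str, resource_name: str,
            cluster: Optional[str] = None) -> bool:
    """Check whether a Kafka resource falls under a parsed Keycloak resource."""
    if res.cluster is not None and res.cluster != cluster:
        return False
    if res.resource_type != resource_type:
        return False
    if res.pattern.endswith("*"):
        return resource_name.startswith(res.pattern[:-1])  # prefix match
    return resource_name == res.pattern  # exact match
```

For example, `matches(parse_resource("Topic:test*"), "Topic", "test-topic")` is true, while the same resource does not cover a consumer group called `test-group`, because the resource type differs.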

I tested the retrieval of an authorization token with the following request:

```bash
# get authorization token for client-1
curl -X POST \
  -d "grant_type=urn:ietf:params:oauth:grant-type:uma-ticket&client_id=client-1&client_secret=**********&audience=kafka-broker" \
  "https://my-keycloakserver.ch/auth/realms/master/protocol/openid-connect/token"
```

bash - get authorization token for client-1

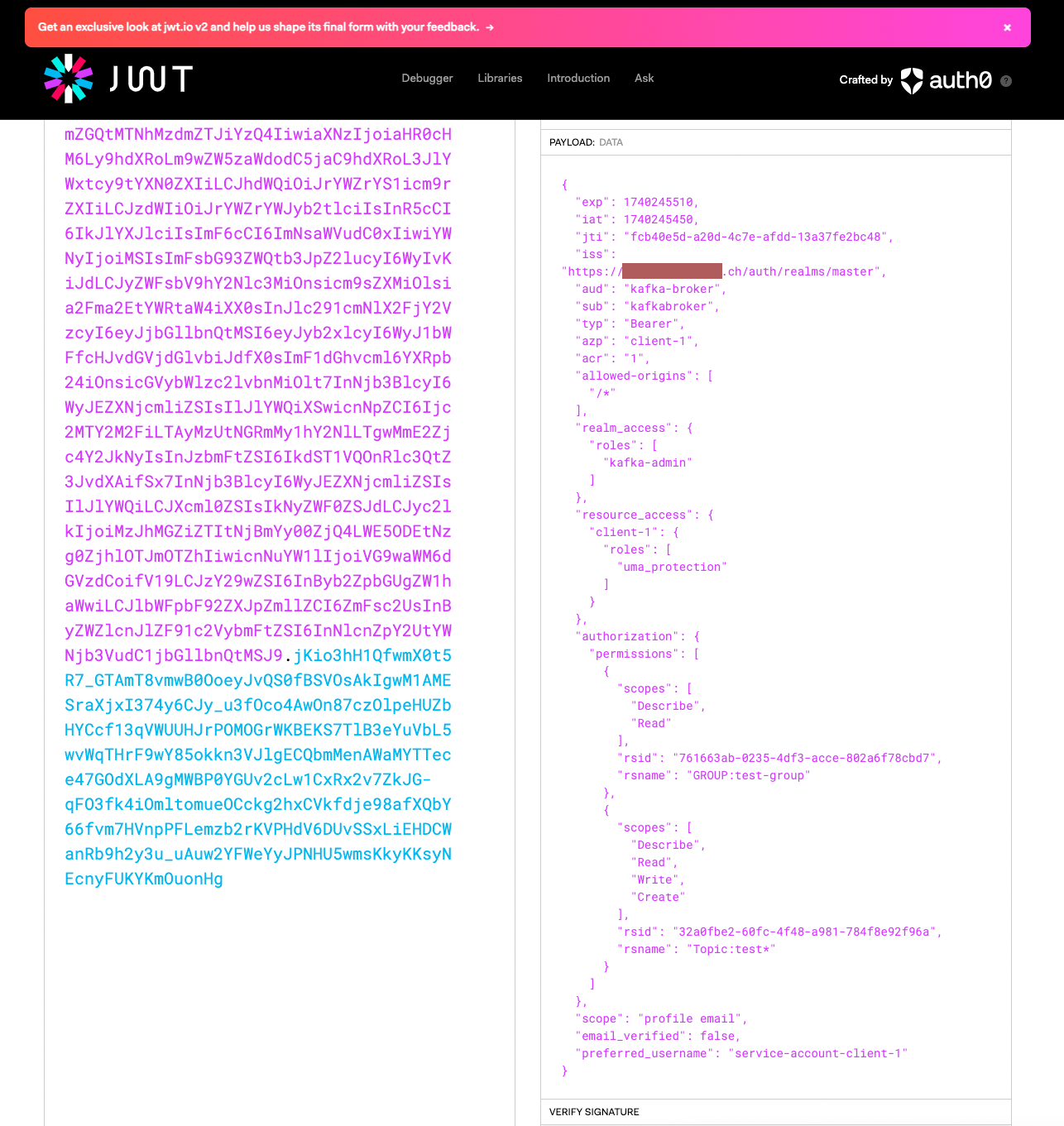

You can inspect the JWT by pasting it into jwt.io. At this point, the token should resemble the structure shown in the screenshot. Pay special attention to the authorization.permissions attribute, as the Keycloak authorizer will use it later.

In this example, the token grants Describe, Read, Write, and Create permissions for topics matching the pattern test*. Our test client application will specifically interact with the topic "test-topic".
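If you'd rather not paste tokens into a website, you can decode the payload locally. The sketch below decodes the payload without verifying it and checks whether `authorization.permissions` grants a scope on a topic. It assumes the Keycloak layout where each permission carries an `rsname` (such as `Topic:test*`) and a `scopes` list, plus the trailing-`*` prefix convention; `decode_payload` and `token_allows` are hypothetical helpers meant for debugging only.

```python
import base64
import json


def decode_payload(token: str) -> dict:
    """Decode the payload segment of a compact JWT WITHOUT verifying the signature."""
    payload_b64 = token.split(".")[1]
    padded = payload_b64 + "=" * (-len(payload_b64) % 4)
    return json.loads(base64.urlsafe_b64decode(padded))


def token_allows(token: str, topic: str, scope: str) -> bool:
    """Return True if any permission in the token grants `scope` on `topic`."""
    claims = decode_payload(token)
    permissions = claims.get("authorization", {}).get("permissions", [])
    for perm in permissions:
        rsname = perm.get("rsname", "")
        if not rsname.startswith("Topic:"):
            continue  # only topic resources are relevant here
        pattern = rsname[len("Topic:"):]
        if pattern.endswith("*"):
            hit = topic.startswith(pattern[:-1])  # prefix match, e.g. test*
        else:
            hit = topic == pattern
        if hit and scope in perm.get("scopes", []):
            return True
    return False
```

With the token from the curl request above, `token_allows(token, "test-topic", "Write")` should return `True` if the permissions are set up as described, while `Delete` should not be granted.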

Kafka Deployment

KAFKA_ADVERTISED_LISTENERS: SASLSSL://kafka-1:19092,SSL://kafka-1:19093

We have two listeners in this deployment: SSL with mutual TLS for internal use (communication between the brokers and connections from Kafka UI), and SASL_SSL with OAuth (listener name SASLSSL) for communication with external clients.

KAFKA_INTER_BROKER_LISTENER_NAME: SSL

Brokers communicate with each other using SSL (TLS) only.

KAFKA_SASL_MECHANISM: OAUTHBEARER

KAFKA_LISTENER_NAME_SASLSSL_SASL_ENABLED_MECHANISMS: OAUTHBEARER

I use the OAUTHBEARER SASL mechanism for authentication (and authorization) of clients.

KAFKA_LISTENER_NAME_SASLSSL_OAUTHBEARER_SASL_SERVER_CALLBACK_HANDLER_CLASS: io.strimzi.kafka.oauth.server.JaasServerOauthValidatorCallbackHandler

Defines the server-side callback handler for OAuth 2.0 authentication. Kafka uses this class (provided by Strimzi) to validate the OAuth tokens presented by clients.

KAFKA_LISTENER_NAME_SASLSSL_OAUTHBEARER_SASL_LOGIN_CALLBACK_HANDLER_CLASS: io.strimzi.kafka.oauth.client.JaasClientOauthLoginCallbackHandler

Defines the client-side callback handler for OAuth authentication. Kafka clients use this handler to obtain OAuth tokens before connecting.

KAFKA_STRIMZI_AUTHORIZATION_TOKEN_ENDPOINT_URI

KAFKA_STRIMZI_AUTHORIZATION_CLIENT_ID

These settings are used by the Strimzi Keycloak authorizer. Every minute, it connects to the token endpoint to fetch an updated list (token) of authorizations for the active clients. For this, it uses its own client ID, kafka-broker.
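If you're wondering which broker properties these variables turn into: as far as I know, the Confluent image strips the `KAFKA_` prefix, lower-cases the rest, and turns underscores into dots. The image also has escape sequences for literal underscores and dashes, which I leave out here. The sketch below applies only that basic rule; `env_to_property` is a hypothetical helper. It also hints at why the listener is called SASLSSL rather than SASL_SSL: an underscore in the listener name would be turned into a dot in the `listener.name.*` properties.

```python
POST_ENV_VARS = [
    "KAFKA_ADVERTISED_LISTENERS",
    "KAFKA_INTER_BROKER_LISTENER_NAME",
    "KAFKA_LISTENER_NAME_SASLSSL_SASL_ENABLED_MECHANISMS",
    "KAFKA_LISTENER_NAME_SASLSSL_OAUTHBEARER_SASL_SERVER_CALLBACK_HANDLER_CLASS",
    "KAFKA_LISTENER_NAME_SASLSSL_OAUTHBEARER_SASL_LOGIN_CALLBACK_HANDLER_CLASS",
    "KAFKA_STRIMZI_AUTHORIZATION_TOKEN_ENDPOINT_URI",
    "KAFKA_STRIMZI_AUTHORIZATION_CLIENT_ID",
]


def env_to_property(env_name: str, prefix: str = "KAFKA_") -> str:
    """Translate a KAFKA_* variable into a broker property name.

    Basic rule only: drop the prefix, lower-case, underscores become dots.
    """
    if not env_name.startswith(prefix):
        raise ValueError(f"{env_name!r} does not start with {prefix!r}")
    return env_name[len(prefix):].lower().replace("_", ".")


if __name__ == "__main__":
    for env_name in POST_ENV_VARS:
        print(f"{env_name} -> {env_to_property(env_name)}")
```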

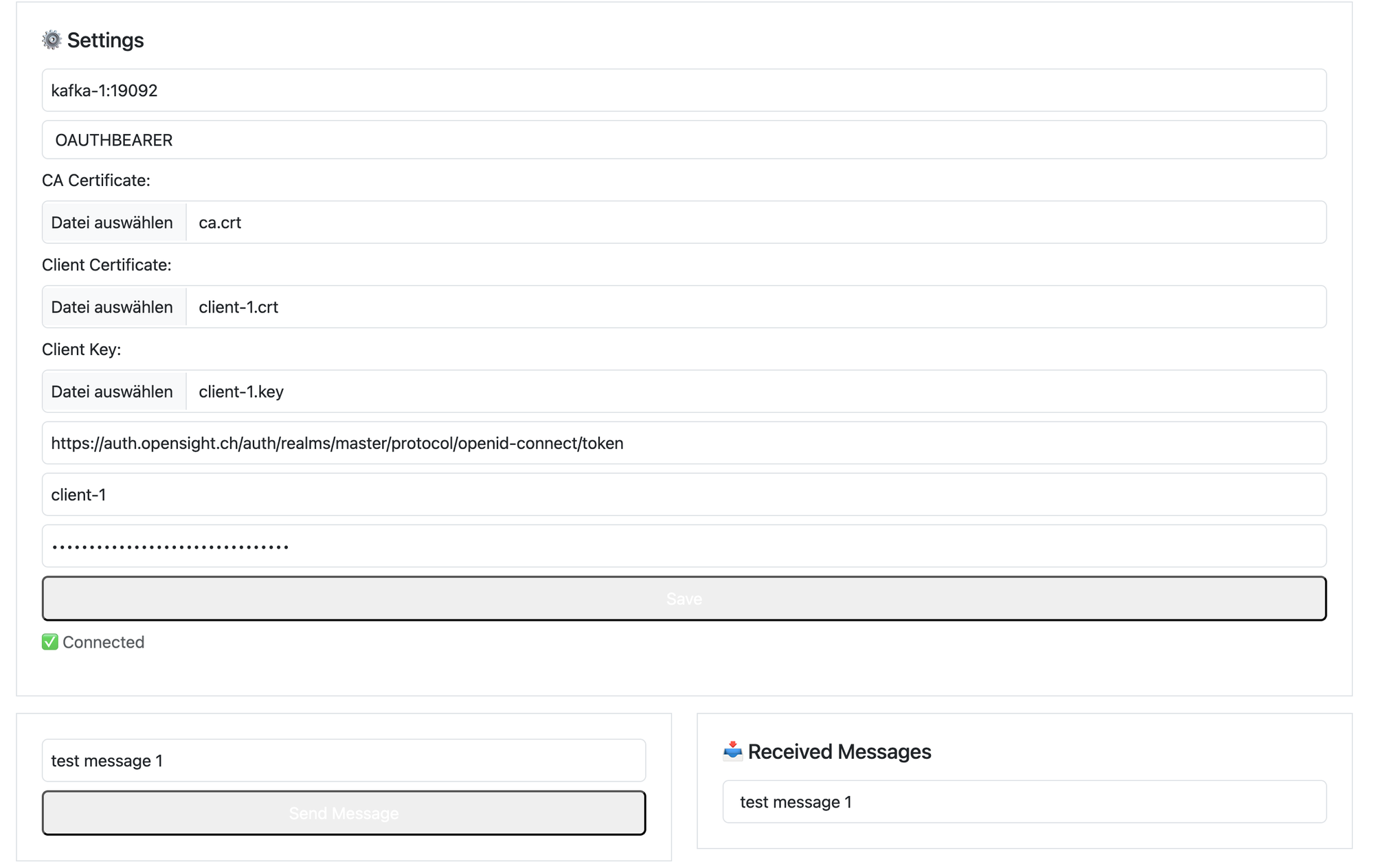

Testing

Once again, the test app got an update and can now connect and authenticate via OAUTHBEARER. It uses client-1 and the client secret generated earlier in Keycloak. The test app retrieves a token from Keycloak that contains the authorizations we defined in the "Keycloak Setup" section above.
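The test app's code isn't shown here. If your client happens to be Python with confluent-kafka (librdkafka), a configuration along the following lines should get it through the SASL_SSL listeners. This is an assumption on my side, not the test app's actual setup: `build_client_config` is a hypothetical helper, the CA path is a placeholder, and the `kafka-N` hostnames must be resolvable from wherever the client runs.

```python
def build_client_config(client_id: str, client_secret: str, token_endpoint: str,
                        ca_location: str) -> dict:
    """librdkafka-style config for an OAUTHBEARER client using OIDC client credentials."""
    return {
        # External SASL_SSL listeners of the three brokers
        "bootstrap.servers": "kafka-1:19092,kafka-2:29092,kafka-3:39092",
        "security.protocol": "SASL_SSL",
        "sasl.mechanisms": "OAUTHBEARER",
        # Let librdkafka fetch and refresh tokens itself via the client credentials grant
        "sasl.oauthbearer.method": "oidc",
        "sasl.oauthbearer.client.id": client_id,
        "sasl.oauthbearer.client.secret": client_secret,
        "sasl.oauthbearer.token.endpoint.url": token_endpoint,
        # CA certificate that signed the broker certificates (placeholder path)
        "ssl.ca.location": ca_location,
    }


if __name__ == "__main__":
    config = build_client_config(
        "client-1",
        "change-me",
        "https://my-keycloakserver.ch/auth/realms/master/protocol/openid-connect/token",
        "/path/to/ca.pem",
    )
    for key, value in config.items():
        print(f"{key}={'***' if 'secret' in key else value}")
```

With confluent-kafka installed, `Producer(config)` would then produce to `test-topic`. As far as I understand the Strimzi docs, the Keycloak authorizer looks up the grants for the client's regular access token itself, so the client does not need to request the UMA ticket shown earlier.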

Conclusion

And voilà—Kafka’s rocking enterprise OAuth 2.0 vibes with Keycloak! Confluent’s team deserves a high-five for their slick (if pricey) tools. Scaling Kafka clusters is no walk in the park, though—I’m not sure we can nail auto data redistribution like they do.

Next up: Kubernetes on GKE. Saddle up for the cluster ride!