Deploying Kafka in Production: From Zero to Hero (Part 2)

Learn how to deploy Apache Kafka in production with SASL/PLAIN authentication for secure client connections. This guide covers configuring broker properties, enabling authentication, and verifying the setup with Kafka UI. Build a scalable, enterprise-ready Kafka cluster.

Kafka in Production - We are Building a Scalable, Secure, and Enterprise Ready Message Bus on Google Cloud Platform

opensight.ch - roman hüsler

Table of Contents

- Part 1 - Kafka Single Instance with Docker

- Part 2 - Kafka Single Instance with Authentication (SASL)

- Part 3 - Kafka Cluster with TLS Authentication and ACLs

- Part 4 - Enterprise Authentication and Access Control (OAuth 2.0)

- Part 5 - Google Cloud Production Kafka Cluster with Strimzi

- Part 6 - Kafka Strimzi Observability and Data Governance (upcoming)

TL;DR

Although I recommend reading the blog posts, I know some folks skip straight to the “source of truth”—the code. It’s like deploying on a Friday without testing.

So, here you go:

Introduction

Apache Kafka is a powerful event streaming platform, but running it in production is a different challenge altogether. We will start with the basics, but this is not a playground—we work our way towards building an enterprise-grade message bus that delivers high availability, security, and scalability for real-world workloads.

I'm not a Kafka specialist myself—I learn on the go. As is often the case with opensight blogs, you can join me on my journey from zero to hero.

In this blog series, we will take Kafka beyond the basics. We will design a production-ready Kafka cluster deployment that is:

- Scalable – Able to handle increasing load without performance bottlenecks.

- Secure – Enforcing authentication (Plain, SCRAM, OAUTH), authorization (ACL, OAUTH), and encryption (TLS).

- Well-structured – Including Schema Registry for proper data governance.

- Enterprise-ready – Implementing features critical for an event-driven architecture in a large-scale environment.

SASL Plaintext Authentication

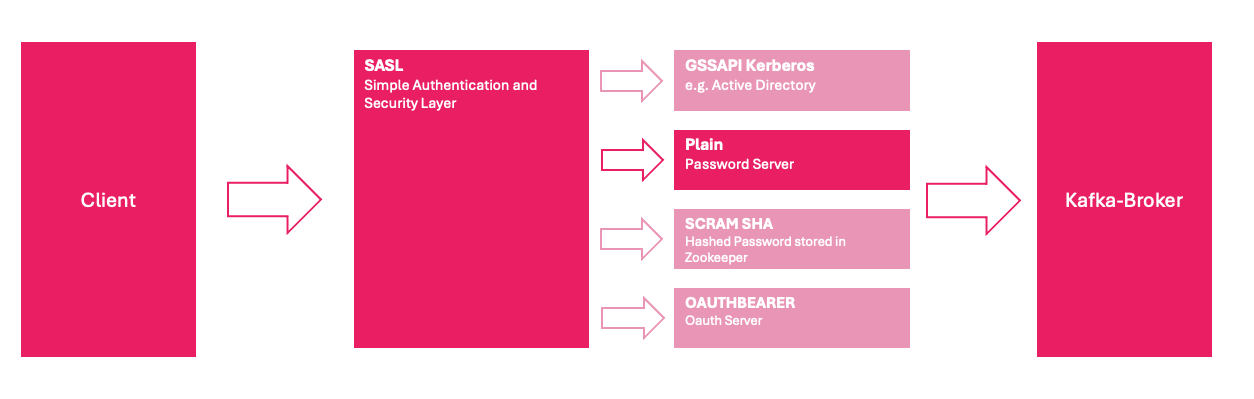

To configure authentication in Kafka, we need to modify the broker configuration (server.properties). In this setup, we use the Simple Authentication and Security Layer (SASL) so that the Kafka broker requires clients to authenticate.

SASL is a flexible authentication framework that supports multiple mechanisms, which allows integration with different authentication providers. Later posts in this series explore additional SASL mechanisms.

Deployment

With SASL Plaintext (the PLAIN mechanism), usernames and passwords are stored directly in the Kafka configuration.

Configuration Properties

Let's look at the configuration properties we must edit to enable SASL Plaintext authentication.

advertised.listeners: PLAINTEXT://kafka:9092,SASL_PLAINTEXT://kafka:9095

First, we add a new listener on port 9095 that has the Simple Authentication and Security Layer (SASL) enabled.

security.inter.broker.protocol: SASL_PLAINTEXT

The brokers also use SASL authentication over plaintext to communicate with each other. With only one broker, this setting has little effect.

sasl.enabled.mechanisms: PLAIN

Enables the PLAIN mechanism for password authentication.

ZOOKEEPER_SASL_ENABLED: false

This environment variable is specific to the Confluent Kafka image (cp-kafka). When set, it translates to the command-line parameter -Dzookeeper.sasl.client=false during Kafka startup. This ensures that Kafka does not attempt to use SASL authentication when communicating with ZooKeeper. In this setup, ZooKeeper operates without authentication.

opts: -Djava.security.auth.login.config=/etc/kafka/configs/kafka_server_jaas.conf -Dzookeeper.sasl.client=false

Kafka startup options, set through KAFKA_OPTS in the compose file. I set zookeeper.sasl.client=false here again in case you are using a different Kafka Docker image. We also pass in the kafka_server_jaas.conf file, which configures the authentication credentials.

    KafkaServer {
        org.apache.kafka.common.security.plain.PlainLoginModule required
        username="kafka"
        password="changeit"
        user_kafka="changeit";
    };

As you can see, a user kafka with the password changeit is configured. This setup is solely for authentication with the Kafka broker. However, we have not yet implemented any Access Control Lists (ACLs) for topic-level authorization. Authorization will be configured at a later stage.
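The JAAS file above holds two kinds of entries. With Kafka's PlainLoginModule, `username` and `password` are the credentials the broker itself presents on inter-broker connections, while each `user_<name>="<password>"` option defines an account that connecting clients may use. The following is a minimal sketch that pulls both out of the file text with regular expressions. The function names are made up for illustration, and the parsing is deliberately naive (it is not a real JAAS parser).

```python
import re
from typing import Dict, Optional, Tuple

# The JAAS section from this post, embedded so the sketch is self-contained.
JAAS_TEXT = """
KafkaServer {
    org.apache.kafka.common.security.plain.PlainLoginModule required
    username="kafka"
    password="changeit"
    user_kafka="changeit";
};
"""


def accepted_users(jaas_text: str) -> Dict[str, str]:
    """Return {name: password} for every user_<name>="<password>" option."""
    return dict(re.findall(r'\buser_(\w+)\s*=\s*"([^"]*)"', jaas_text))


def inter_broker_identity(jaas_text: str) -> Optional[Tuple[str, str]]:
    """Return the (username, password) pair the broker uses for its own connections."""
    user = re.search(r'\busername\s*=\s*"([^"]*)"', jaas_text)
    password = re.search(r'\bpassword\s*=\s*"([^"]*)"', jaas_text)
    if user is None or password is None:
        return None
    return user.group(1), password.group(1)


if __name__ == "__main__":
    print("client accounts:", accepted_users(JAAS_TEXT))
    print("inter-broker identity:", inter_broker_identity(JAAS_TEXT))
```

Adding a second client account would mean adding another `user_<name>` line to the file, which is one reason this mechanism does not scale well beyond small setups.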

KafkaUI

Kafka UI now needs a few additional properties so that it can authenticate with the Kafka broker. See the kafka-ui section of the docker-compose.yml:

    version: "3.8"

    services:
      zookeeper:
        image: "${ZOOKEEPER_IMAGE}"
        container_name: zookeeper
        restart: always
        environment:
          ZOOKEEPER_CLIENT_PORT: 2181
          ZOOKEEPER_TICK_TIME: 2000
          ZOOKEEPER_MAX_CLIENT_CNXNS: 60
          KAFKA_HEAP_OPTS: "-Xms512M -Xmx1024M"
        ports:
          - "2181:2181"
        networks:
          - kafka

      kafka:
        image: "${KAFKA_IMAGE}"
        container_name: kafka
        restart: always
        depends_on:
          - zookeeper
        environment:
          KAFKA_BROKER_ID: 1
          KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
          KAFKA_AUTO_CREATE_TOPICS_ENABLE: "true"
          KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 1
          KAFKA_TRANSACTION_STATE_LOG_MIN_ISR: 1
          KAFKA_TRANSACTION_STATE_LOG_REPLICATION_FACTOR: 1
          KAFKA_GROUP_INITIAL_REBALANCE_DELAY_MS: 100
          # Port 9095 requires SASL authentication; port 9092 remains an unauthenticated listener.
          KAFKA_ADVERTISED_LISTENERS: "PLAINTEXT://kafka:9092,SASL_PLAINTEXT://kafka:9095"
          KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: "PLAINTEXT:PLAINTEXT,SASL_PLAINTEXT:SASL_PLAINTEXT"
          KAFKA_SECURITY_INTER_BROKER_PROTOCOL: SASL_PLAINTEXT
          KAFKA_SASL_MECHANISM_INTER_BROKER_PROTOCOL: PLAIN
          KAFKA_SASL_ENABLED_MECHANISMS: PLAIN
          # Point the JVM at the JAAS file that holds the credentials.
          KAFKA_OPTS: "-Djava.security.auth.login.config=/etc/kafka/configs/kafka_server_jaas.conf -Dzookeeper.sasl.client=false"
          KAFKA_ZOOKEEPER_CONNECTION_TIMEOUT_MS: 30000
          ZOOKEEPER_SASL_ENABLED: "false"
        ports:
          - "9092:9092"
          - "9095:9095"
        volumes:
          # Local directory that contains kafka_server_jaas.conf.
          - "./kafka_config:/etc/kafka/configs"
        networks:
          - kafka

      kafka-ui:
        image: "${KAFKA_UI_IMAGE}"
        container_name: kafka-ui
        restart: always
        depends_on:
          - kafka
        ports:
          - "8089:8080"
        environment:
          KAFKA_CLUSTERS_0_NAME: local
          # Connect to the SASL listener with the credentials from the JAAS file.
          KAFKA_CLUSTERS_0_BOOTSTRAPSERVERS: kafka:9095
          KAFKA_CLUSTERS_0_PROPERTIES_SECURITY_PROTOCOL: SASL_PLAINTEXT
          KAFKA_CLUSTERS_0_PROPERTIES_SASL_MECHANISM: PLAIN
          KAFKA_CLUSTERS_0_PROPERTIES_SASL_JAAS_CONFIG: 'org.apache.kafka.common.security.plain.PlainLoginModule required username="kafka" password="changeit";'
        networks:
          - kafka

    networks:
      kafka:
        driver: bridge

Kafka deployment with SASL Plaintext authentication
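The broker settings in the compose file are written as environment variables rather than as server.properties entries, for example KAFKA_ADVERTISED_LISTENERS for advertised.listeners. The sketch below approximates that naming rule (drop the KAFKA_ prefix, lowercase, replace underscores with dots) so the two notations are easier to line up. It is a simplification written for this post, not the image's actual startup code. The image documentation describes extra escaping for property names that themselves contain underscores or dashes, which is not handled here, and variables such as KAFKA_OPTS are JVM startup options rather than broker properties.

```python
def env_to_property(env_name: str, prefix: str = "KAFKA_") -> str:
    """Approximate the env-var naming rule: strip the prefix, lowercase, '_' becomes '.'."""
    if not env_name.startswith(prefix):
        raise ValueError(f"{env_name!r} does not start with {prefix!r}")
    return env_name[len(prefix):].lower().replace("_", ".")


if __name__ == "__main__":
    # Variable names taken from the compose file in this post.
    for name in (
        "KAFKA_ADVERTISED_LISTENERS",
        "KAFKA_SECURITY_INTER_BROKER_PROTOCOL",
        "KAFKA_SASL_ENABLED_MECHANISMS",
    ):
        print(f"{name} -> {env_to_property(name)}")
```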

Testing

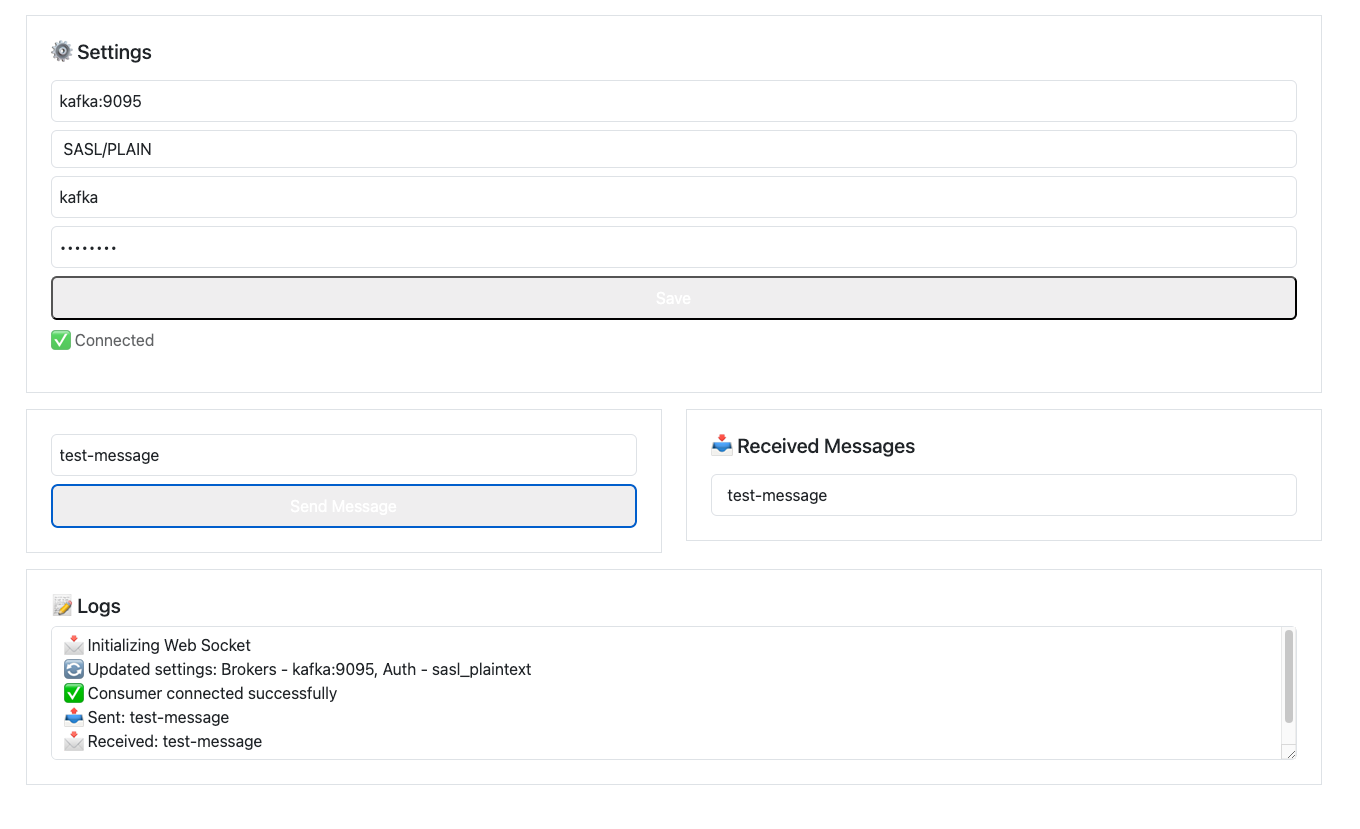

As mentioned in Part 1 of the blog, I highly recommend experimenting with Kafka UI. The connection status appears green, confirming that the setup is working correctly.

Additionally, our test application now includes a new feature: the ability to authenticate using SASL/PLAIN. In this setup, Kafka SASL is exposed on port 9095, and the test application successfully established a connection—further validating our authentication configuration.

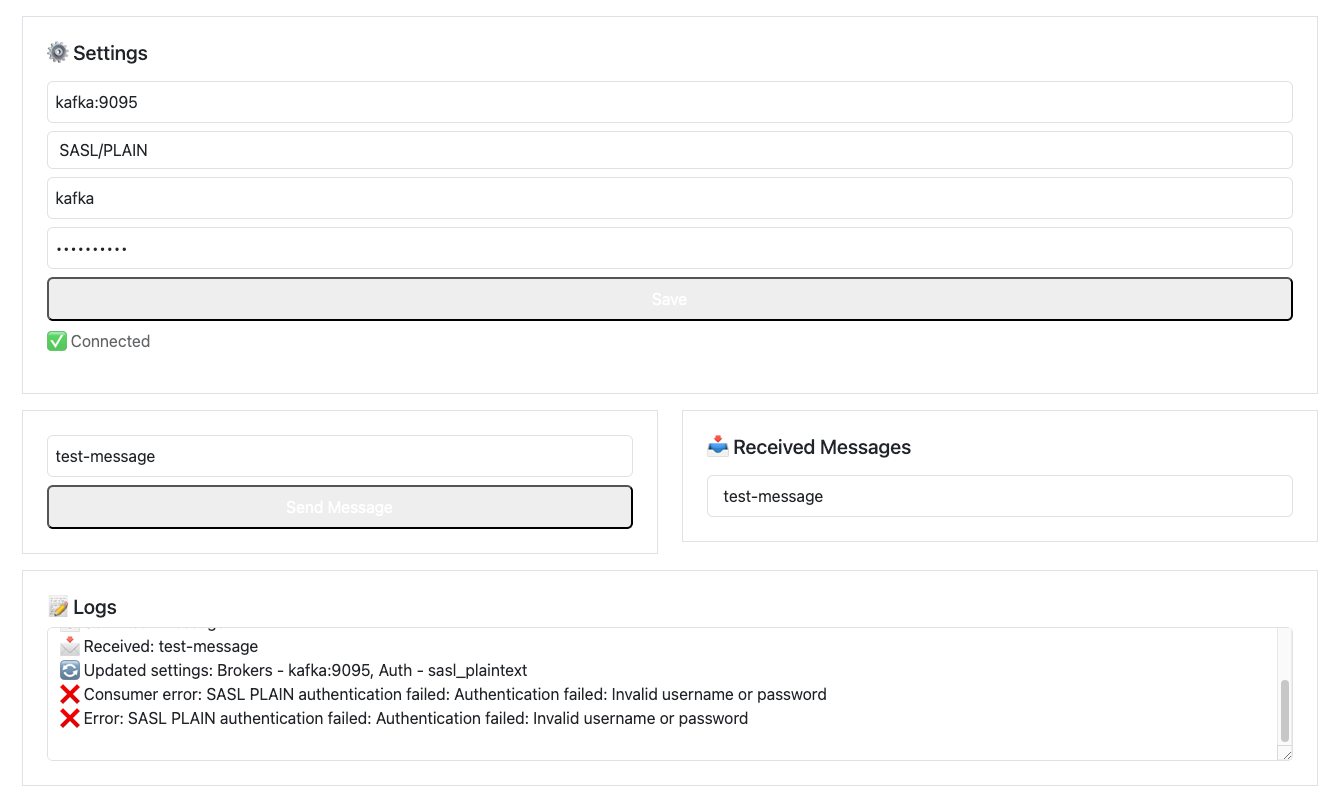
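A Java-based Kafka client connecting to port 9095 needs the same three settings that Kafka UI receives in the compose file: security.protocol, sasl.mechanism, and sasl.jaas.config. As a rough sketch, the helper below renders them as the text of a client properties file. The function name is hypothetical, and the helper refuses values containing quotes or backslashes instead of escaping them.

```python
def sasl_plain_client_properties(username: str, password: str) -> str:
    """Render client settings for a SASL/PLAIN connection over SASL_PLAINTEXT."""
    for value in (username, password):
        if '"' in value or "\\" in value:
            # Escaping rules are out of scope for this sketch.
            raise ValueError("quotes and backslashes are not supported here")
    jaas = (
        "org.apache.kafka.common.security.plain.PlainLoginModule required "
        f'username="{username}" password="{password}";'
    )
    lines = [
        "security.protocol=SASL_PLAINTEXT",
        "sasl.mechanism=PLAIN",
        f"sasl.jaas.config={jaas}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Credentials from the JAAS file in this post.
    print(sasl_plain_client_properties("kafka", "changeit"), end="")
```

The output can be saved to a file and handed to the Kafka command-line tools, for example through the `--command-config` option of `kafka-topics`, together with `kafka:9095` as the bootstrap server.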

A good way to verify that SASL/PLAIN authentication is correctly configured is to test with incorrect credentials. When the client specifies a wrong password, Kafka rightfully denies the connection. Similarly, if the client disables authentication by setting it to "none", the connection to the SASL listener is also rejected.

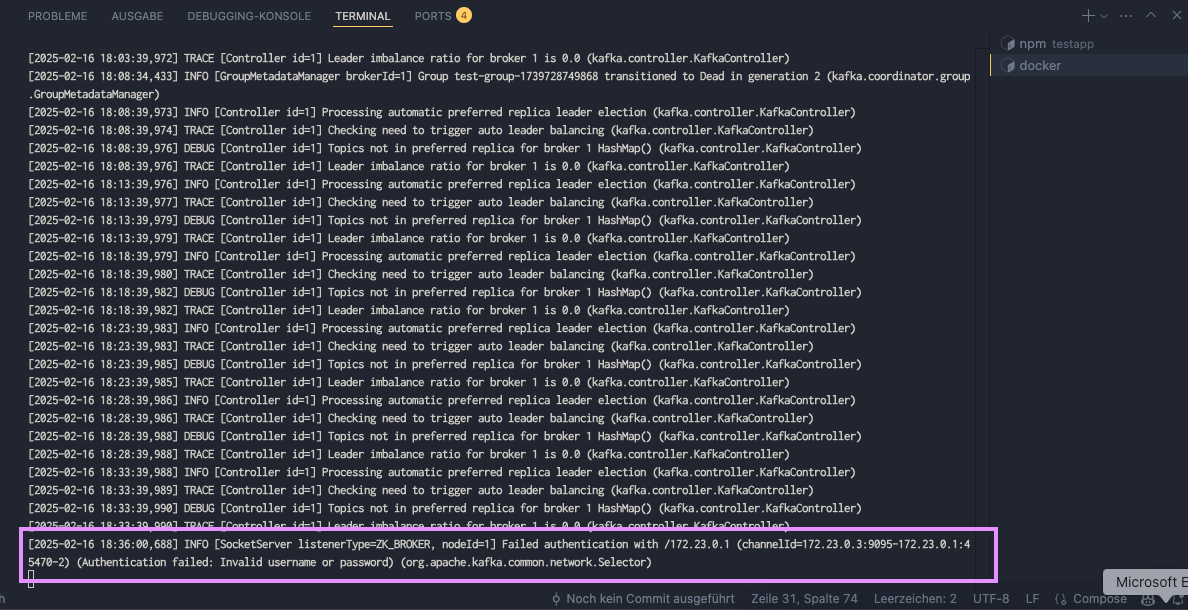
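It helps to know what the client actually sends when it authenticates. The PLAIN mechanism (RFC 4616) defines a single message: an optional authorization identity, the username, and the password, separated by NUL bytes. The sketch below builds and splits that message. It shows only the mechanism's payload, not the Kafka requests that carry it, and the function names are invented for illustration.

```python
from typing import Tuple


def plain_initial_response(username: str, password: str, authzid: str = "") -> bytes:
    """Build the RFC 4616 message: authzid NUL authcid NUL passwd (UTF-8)."""
    return b"\x00".join(part.encode("utf-8") for part in (authzid, username, password))


def parse_plain_initial_response(token: bytes) -> Tuple[str, str, str]:
    """Split a PLAIN message back into (authzid, username, password)."""
    parts = token.split(b"\x00")
    if len(parts) != 3:
        raise ValueError("expected exactly two NUL separators")
    authzid, username, password = (p.decode("utf-8") for p in parts)
    return authzid, username, password


if __name__ == "__main__":
    token = plain_initial_response("kafka", "changeit")
    print(token)
    print(parse_plain_initial_response(token))
```

The password appears unmodified in the message. On a SASL_PLAINTEXT listener nothing encrypts it in transit, which is why this setup is only a stepping stone toward the TLS-based configuration later in the series.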

Also check the logs of the kafka container while performing a connection:

    docker logs -f kafka

Checking the Kafka container logs

This confirms that SASL/PLAIN authentication is enforced as expected, indicating that our setup is successful!

With that, we conclude this part of the blog series. In Part 3, we will move beyond the single-instance deployment and set up a three-node Kafka cluster. Additionally, we will explore SSL (TLS) for authentication and implement access control (ACL) to enhance security. Stay tuned!